WIP logging, benchmarking and monitoring

Alexander Diemand, Andreas Triantafyllos, and Neil Davies

2018-12-04

Aim

- combine logging and benchmarking

- add monitoring on top

- provide means for micro-benchmarks

- runtime configuration

- adaptable to needs of various stakeholders

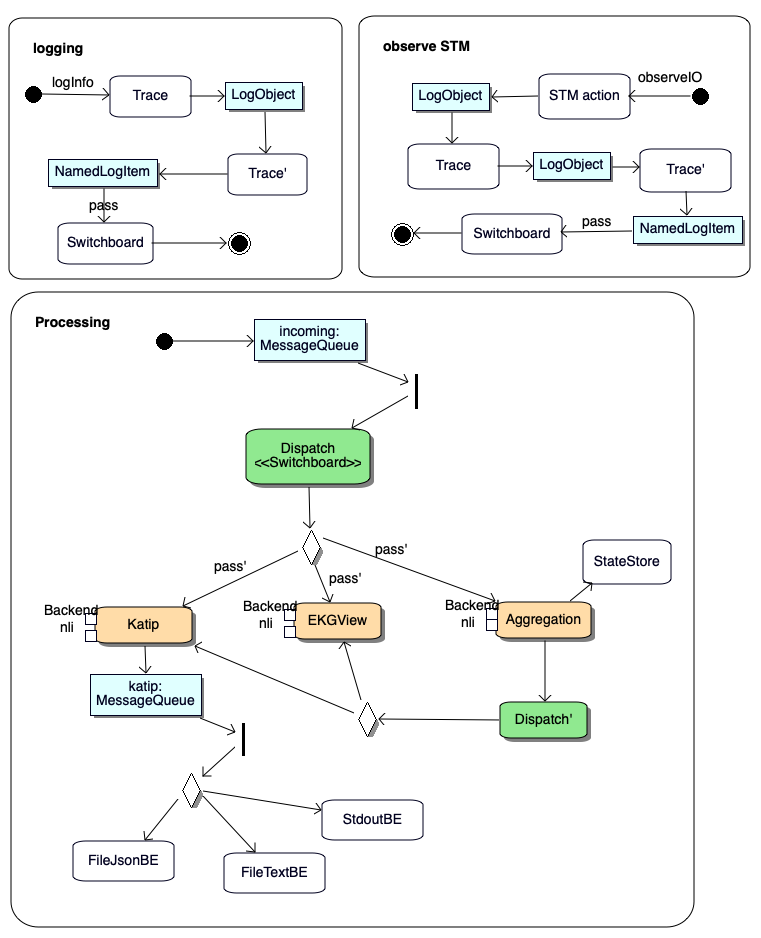

1 logging

Purpose: capture and output messages

- static message creation (Severity, String)

  - logInfo "this is a message"

- non-blocking and low overhead: decoupled via a message queue

- backend: messages are routed in a statically programmed way
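A minimal sketch of the queue-based decoupling, using only base: the caller writes into an unbounded Chan and returns immediately, while a forked worker thread drains the queue and hands each message to one statically chosen backend. The Severity constructors and the names LogMessage, Logger and newLogger are hypothetical, chosen for illustration; they are not the framework's API.

```haskell
import Control.Concurrent (forkIO)
import Control.Concurrent.Chan (Chan, newChan, readChan, writeChan)
import Control.Monad (forever)

-- Hypothetical severity levels, ordered from least to most severe.
data Severity = Debug | Info | Warning | Error
  deriving (Show, Eq, Ord)

-- A message is created statically from a severity and a string.
data LogMessage = LogMessage Severity String
  deriving (Show, Eq)

-- The logger is just the write end of an unbounded queue.
newtype Logger = Logger (Chan LogMessage)

-- Start a worker thread that forever takes messages off the queue
-- and passes them to one fixed backend.
newLogger :: (LogMessage -> IO ()) -> IO Logger
newLogger backend = do
  queue <- newChan
  _ <- forkIO (forever (readChan queue >>= backend))
  pure (Logger queue)

-- writeChan never blocks on an unbounded Chan, so the caller returns at once.
logInfo :: Logger -> String -> IO ()
logInfo (Logger queue) msg = writeChan queue (LogMessage Info msg)
```

For example, newLogger print yields a logger whose worker prints each message; logInfo returns as soon as the message is queued, not when it has been printed.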

What if we want to drop some of the messages?

Or, increase the Severity of a particular message?

1.1 trace

- implemented as a Contravariant Trace (thanks to Alexander Vieth)

- not in a monad stack, but passed as an argument

  - logInfo logTrace "this is a message"

- New: we can build hierarchies

  - captures the call graph

  - parents can decide how their child traces are processed

- runtime configurable

  - each Trace has its own behaviour: NoTrace ..

  - example in: Trace.subTrace
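The idea of a contravariant trace can be sketched in a few lines, assuming a trace is simply a function that consumes items. contramap adapts a trace to a new input type, appendName builds a child trace that records its name in the context path (so the path mirrors the call graph), and subTrace lets the parent choose the child's behaviour by name. Trace, LogItem, appendName and the two-constructor SubTrace type are assumptions for illustration; the framework's own Trace.subTrace and its behaviours (the ".." after NoTrace) differ.

```haskell
import Data.Functor.Contravariant (Contravariant (..))

data Severity = Debug | Info | Warning | Error
  deriving (Show, Eq, Ord)

-- A trace is a function that consumes items; it is passed as an ordinary
-- argument instead of living in a monad stack.
newtype Trace m a = Trace { traceWith :: a -> m () }

-- contramap turns a Trace m b into a Trace m a, given a function a -> b.
instance Contravariant (Trace m) where
  contramap f (Trace t) = Trace (t . f)

-- Hypothetical log item carrying its context path (outermost name first).
data LogItem = LogItem
  { liContext  :: [String]
  , liSeverity :: Severity
  , liMsg      :: String
  } deriving (Show, Eq)

-- A trace that drops everything.
noTrace :: Applicative m => Trace m a
noTrace = Trace (\_ -> pure ())

logInfo :: Trace m LogItem -> String -> m ()
logInfo tr msg = traceWith tr (LogItem [] Info msg)

-- A child trace prepends its name, then hands the item to its parent.
appendName :: String -> Trace m LogItem -> Trace m LogItem
appendName name = contramap (\li -> li { liContext = name : liContext li })

-- Hypothetical behaviours; the slide implies that more exist.
data SubTrace = Neutral | NoTrace
  deriving (Show, Eq)

-- The parent decides how a child is processed by looking up the child's
-- name. Here the lookup is a pure function; the slides describe it as
-- runtime configurable.
subTrace :: Applicative m
         => (String -> SubTrace) -> String -> Trace m LogItem -> Trace m LogItem
subTrace behaviour name parent =
  case behaviour name of
    Neutral -> appendName name parent
    NoTrace -> noTrace
```

A child created as subTrace b "child" (subTrace b "parent" base) tags its items with the context ["parent", "child"], while a child whose name maps to NoTrace silently discards them.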

2 benchmarking

Purpose: observe outcomes and events, and prepare them for later analysis

- record events with exact timestamps

- currently: written as JSON directly to a file

- we will integrate this into our logging
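Event recording could look roughly like this: each event is stamped with GHC's monotonic clock (getMonotonicTimeNSec from GHC.Clock, in nanoseconds) and appended to a file as one JSON object per line. The Event type, its field names and the hand-rolled JSON rendering are assumptions to keep the sketch dependency-free; a real implementation would more likely use a JSON library and record wall-clock time as well.

```haskell
import Data.List (intercalate)
import Data.Word (Word64)
import GHC.Clock (getMonotonicTimeNSec)

-- Hypothetical benchmark event: a name, a timestamp and numeric fields.
data Event = Event
  { evName   :: String
  , evTimeNs :: Word64              -- monotonic clock, nanoseconds
  , evFields :: [(String, Double)]
  } deriving (Show, Eq)

-- Stamp an event with the current monotonic time.
recordEvent :: String -> [(String, Double)] -> IO Event
recordEvent name fields = do
  t <- getMonotonicTimeNSec
  pure (Event name t fields)

-- Render an event as one JSON object. Simplification: strings are rendered
-- with show, which matches JSON only for printable ASCII, and non-finite
-- Doubles (NaN, Infinity) would not be valid JSON.
toJSONLine :: Event -> String
toJSONLine (Event name t fields) =
  "{" ++ intercalate "," entries ++ "}"
  where
    pair key val = show key ++ ":" ++ val
    entries = pair "event" (show name)
            : pair "time_ns" (show t)
            : [pair k (show v) | (k, v) <- fields]

-- Append one line per event, so the file can be analysed later.
appendEvent :: FilePath -> Event -> IO ()
appendEvent path ev = appendFile path (toJSONLine ev ++ "\n")
```

For instance, toJSONLine (Event "block" 42 [("size", 3.0)]) renders as {"event":"block","time_ns":42,"size":3.0}.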

What if we want to stop recording some of the events? Or, turn on others?

2.1 observables

Outcomes have a starting and terminating time point.

- bracket STM (Observer.STM) and Monad (Observer.Monad) actions

- record O/S metrics: Counters.Linux

- calculate rate of change, durations, ..
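Bracketing can be sketched as taking the same measurement immediately before and after an action. In this sketch the only measurement is the monotonic clock; the O/S counters mentioned above (Counters.Linux) are not modelled. bracketObserveIO, bracketObserveSTM, Observable and rateOfChange are hypothetical names, and an STM transaction is observed simply by bracketing its atomically call.

```haskell
import Data.Word (Word64)
import GHC.Clock (getMonotonicTimeNSec)
import GHC.Conc (STM, atomically)

-- An outcome: the measurement at its starting and terminating time point.
data Observable = Observable { obsStart :: Word64, obsEnd :: Word64 }
  deriving (Show, Eq)

-- Measure before and after an IO action. Note: if the action throws,
-- no end measurement is taken.
bracketObserveIO :: IO a -> IO (a, Observable)
bracketObserveIO action = do
  t0 <- getMonotonicTimeNSec
  r  <- action
  t1 <- getMonotonicTimeNSec
  pure (r, Observable t0 t1)

-- Observe an STM transaction by bracketing its atomic execution.
bracketObserveSTM :: STM a -> IO (a, Observable)
bracketObserveSTM = bracketObserveIO . atomically

-- Duration of an outcome in nanoseconds.
durationNs :: Observable -> Word64
durationNs (Observable s e) = e - s

-- Rate of change per second between two (timestamp in ns, value) samples.
rateOfChange :: (Word64, Double) -> (Word64, Double) -> Double
rateOfChange (t0, v0) (t1, v1) = (v1 - v0) / (fromIntegral (t1 - t0) / 1e9)
```

For example, a counter that goes from 10 to 30 over two seconds gives rateOfChange (0, 10) (2000000000, 30) == 10.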

3 monitoring

Purpose: analyse messages and, once a threshold (frequency, value) is exceeded, trigger a cascade of alarms

- built on observables, outcomes and events

- processes the stream of them

- aggregates statistics

- tracks thresholds
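The two monitoring ingredients can be sketched under simplifying assumptions: statistics are aggregated with a strict left fold over the stream, and a threshold check emits a warning for each value above a value limit, escalating to a critical alarm once the number of such values reaches a frequency limit. Stats, Alarm, aggregate and monitor are illustrative names; the framework's actual alarm cascade is not specified on this slide.

```haskell
import Data.List (foldl')

-- Running statistics over a stream of values (strict fields, so the
-- fold does not build up thunks).
data Stats = Stats
  { statCount :: !Int
  , statSum   :: !Double
  , statMin   :: !Double
  , statMax   :: !Double
  } deriving (Show, Eq)

-- Fold one more value into the statistics.
step :: Stats -> Double -> Stats
step (Stats n s lo hi) x = Stats (n + 1) (s + x) (min lo x) (max hi x)

-- Aggregate a non-empty stream; Nothing for an empty one.
aggregate :: [Double] -> Maybe Stats
aggregate []       = Nothing
aggregate (x : xs) = Just (foldl' step (Stats 1 x x x) xs)

mean :: Stats -> Double
mean st = statSum st / fromIntegral (statCount st)

-- Hypothetical alarm levels for a two-step cascade.
data Alarm = Warn Double | Critical Int
  deriving (Show, Eq)

-- Warn on every value above valueLimit; raise Critical once the number
-- of such values reaches freqLimit.
monitor :: Double -> Int -> [Double] -> [Alarm]
monitor valueLimit freqLimit = go 0
  where
    go _ [] = []
    go n (x : xs)
      | x > valueLimit =
          let n' = n + 1
          in Warn x : [Critical n' | n' == freqLimit] ++ go n' xs
      | otherwise = go n xs
```

For instance, monitor 10 2 [5, 12, 7, 15, 20] yields [Warn 12.0, Warn 15.0, Critical 2, Warn 20.0].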

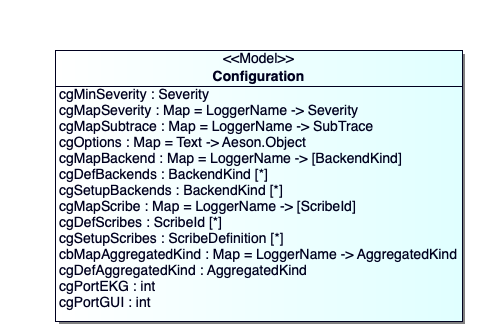

4 configuration

Purpose: change behaviour of LoBeMo in a named context

- can be changed at runtime

- redirects output (output selection)

- overrides Severity (tbd)

- filters by Severity

- defines SubTrace
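Runtime configuration per named context can be sketched as a mutable table that is consulted every time a message is logged, so changing an entry takes effect immediately. This sketch covers only filtering and overriding by Severity; ContextConfig, defaultConfig (minimum Info), setContext and effectiveSeverity are hypothetical, and applying the override before the filter is just one possible reading of the item still marked (tbd).

```haskell
import Data.IORef (IORef, atomicModifyIORef', newIORef, readIORef)
import Data.Maybe (fromMaybe)

data Severity = Debug | Info | Warning | Error
  deriving (Show, Eq, Ord)

-- Hypothetical per-context settings; field names are illustrative only.
data ContextConfig = ContextConfig
  { minSeverity      :: Severity        -- messages below this are dropped
  , severityOverride :: Maybe Severity  -- if set, replaces the message severity
  } deriving (Show, Eq)

-- Used for any context that has no entry of its own.
defaultConfig :: ContextConfig
defaultConfig = ContextConfig { minSeverity = Info, severityOverride = Nothing }

-- A mutable table from context names to settings.
newtype Configuration = Configuration (IORef [(String, ContextConfig)])

newConfiguration :: IO Configuration
newConfiguration = Configuration <$> newIORef []

-- Replace the settings of one named context while the program runs.
setContext :: Configuration -> String -> ContextConfig -> IO ()
setContext (Configuration ref) name cc =
  atomicModifyIORef' ref (\m -> ((name, cc) : filter ((/= name) . fst) m, ()))

-- Consulted for every message: the effective severity, or Nothing if the
-- message is filtered out in this context.
effectiveSeverity :: Configuration -> String -> Severity -> IO (Maybe Severity)
effectiveSeverity (Configuration ref) name sev = do
  m <- readIORef ref
  let cc   = fromMaybe defaultConfig (lookup name m)
      sev' = fromMaybe sev (severityOverride cc)
  pure (if sev' >= minSeverity cc then Just sev' else Nothing)
```

With the default settings a Debug message in some context is dropped; after setContext lowers that context's minimum to Debug, the very next lookup lets it through.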

4.1 output selection

Redirection of log messages and observables to different outputs:

aggregation | EKG | Katip
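Output selection can be sketched as a switchboard: for a named context, look up the outputs it is routed to and hand the item to each of them in turn. Log messages and observable values share one LogContent type here; BackendKind, LogContent and dispatch are hypothetical, and the handler is a parameter so that the sketch does not depend on EKG or Katip themselves.

```haskell
-- The three outputs named on the slide.
data BackendKind = AggregationBK | EKGViewBK | KatipBK
  deriving (Show, Eq)

-- Log messages and observable values travel through the same path.
data LogContent = LogMessage String | LogValue String Double
  deriving (Show, Eq)

-- Look up the outputs selected for a named context and pass the item
-- to each of them, in order.
dispatch :: Monad m
         => (String -> [BackendKind])                      -- output selection per name
         -> (BackendKind -> String -> LogContent -> m ())  -- handler per output
         -> String -> LogContent -> m ()
dispatch select handler name item =
  mapM_ (\b -> handler b name item) (select name)
```

In IO, a handler such as \b n c -> putStrLn (show b ++ " " ++ n ++ ": " ++ show c) prints every routed item; because the selection is just a function, it can equally be read from the runtime configuration.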

4.1.1 information reduction

- Aggregation

- filtering: traceConditionally
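Filtering can be expressed as a trace transformer that forwards an item only when a predicate holds, so dropped items never reach any output. The name mirrors traceConditionally above, but this signature and the severity predicate atLeast are assumptions; in the framework the decision is driven by the configuration rather than by a predicate passed in directly.

```haskell
-- Minimal trace type: a function that consumes items.
newtype Trace m a = Trace { traceWith :: a -> m () }

data Severity = Debug | Info | Warning | Error
  deriving (Show, Eq, Ord)

data LogItem = LogItem { liSeverity :: Severity, liMsg :: String }
  deriving (Show, Eq)

-- Forward an item only if the predicate holds; otherwise do nothing.
traceConditionally :: Applicative m => (a -> Bool) -> Trace m a -> Trace m a
traceConditionally keep (Trace t) = Trace (\x -> if keep x then t x else pure ())

-- Example predicate: keep items at or above a minimum severity.
atLeast :: Severity -> LogItem -> Bool
atLeast s li = liSeverity li >= s
```

Wrapping a backend trace as traceConditionally (atLeast Warning) backend reduces the information it receives to warnings and errors.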

4.1.2 EKG metrics view

- predefined standard metrics

- our own metrics: labels and gauges

4.1.3 Katip log files

- Katip-based queue and scribes

- log rotation

5 ...

- requirements

- performance & security

- integration, PoC

5.1 logging requirements

- Support

  - reduced size of logs

  - automated log analysis

- DevOps

  - run core nodes

  - monitoring

- Developers

  - run unit/property tests

  - micro-benchmarks

- QA testing & benchmarks

  - run integration tests

  - run (holistic) benchmarks

5.2 performance and security considerations

- how much does capturing metrics cost?

- conditional compilation: can we exclude or disable benchmarking code in end-user products?

  - high-end users (exchanges, enterprises)

  - wallet users
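One common answer to the conditional-compilation question is CPP: guard the measurement code with a preprocessor flag so that builds without it contain only the bare action. ENABLE_BENCHMARKING and measured are hypothetical names; the flag could be set, for example, through cpp-options in the package's .cabal file for benchmark builds only.

```haskell
{-# LANGUAGE CPP #-}

#ifdef ENABLE_BENCHMARKING
import GHC.Clock (getMonotonicTimeNSec)
#endif
import Data.Word (Word64)

-- Run an action and, only in benchmarking builds, report its duration in ns.
-- Both branches share one type, so call sites compile unchanged either way.
measured :: IO a -> IO (a, Maybe Word64)
#ifdef ENABLE_BENCHMARKING
measured action = do
  t0 <- getMonotonicTimeNSec
  r  <- action
  t1 <- getMonotonicTimeNSec
  pure (r, Just (t1 - t0))
#else
-- Without the flag, no clock is read and no measurement code is compiled in.
measured action = fmap (\r -> (r, Nothing)) action
#endif
```

Keeping a single signature for both branches is the design choice that makes this workable: end-user builds pay nothing for the measurement, while benchmark builds get durations without touching the calling code.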

5.3 integration

- enable micro-benchmarks in Cardano

- integration into node-shell

- PoC in ouroboros-network

8 project overview

>> projectURL <<

- literate Haskell (thanks to Andres for lhs2TeX)

- documentation of source code

- documentation of tests

- this presentation

- we still need help with:

  - nix scripts

  - buildkite CI setup