Prologue

The Catalyst-Core Documentation is currently undergoing review and re-write.

This documentation is published AS-IS. There is no guarantee that it is correct with regard to the current implementation. The source of all truth with regard to the implementation is the source code.

Patches to improve the documentation are very welcome. See Contributing.

Introduction

What is Catalyst-Core

Core Ledger

Welcome to the Jörmungandr User Guide.

Jörmungandr is a node implementation, written in Rust, with the initial aim of supporting the Ouroboros family of consensus protocols.

A node is a participant in a blockchain network, continuously making, sending, receiving and validating blocks. Each node is responsible for making sure that all the rules of the protocol are followed.

Mythology

Jörmungandr refers to the Midgard Serpent in Norse mythology. It is a hint to Ouroboros, the Ancient Egyptian serpent that eats its own tail, as well as to the IOHK paper on proof of stake.

General Concepts

This chapter covers the general concepts of the blockchain, and their application in the node, and is followed by the node organisation and the user interaction with it.

Blockchain concepts

Time

Slots represent the basic unit of time in the blockchain, and at each slot a block could be present.

Consecutive slots are grouped into epochs, whose size is defined by the protocol and can be updated.
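As a minimal sketch of how slots and epochs relate, assuming every epoch has the same fixed number of slots (the protocol can update the epoch size, which this sketch ignores), a global slot number splits into an epoch and a slot within that epoch. The names `SlotDate` and `slots_per_epoch` are illustrative, not quoted from the node's code.

```python
from typing import NamedTuple


class SlotDate(NamedTuple):
    """A point in blockchain time: an epoch and a slot inside that epoch."""

    epoch: int
    slot: int


def to_slot_date(global_slot: int, slots_per_epoch: int) -> SlotDate:
    """Split a global slot index into (epoch, slot within the epoch).

    Assumes every epoch has the same number of slots; a real chain can
    change the epoch size through updates, which this sketch ignores.
    """
    if slots_per_epoch <= 0:
        raise ValueError("slots_per_epoch must be positive")
    if global_slot < 0:
        raise ValueError("global_slot must be non-negative")
    epoch, slot = divmod(global_slot, slots_per_epoch)
    return SlotDate(epoch, slot)


def to_global_slot(date: SlotDate, slots_per_epoch: int) -> int:
    """Inverse of to_slot_date under the same constant-epoch-size assumption."""
    return date.epoch * slots_per_epoch + date.slot


# With 100 slots per epoch, global slot 250 is slot 50 of epoch 2.
print(to_slot_date(250, 100))  # SlotDate(epoch=2, slot=50)
```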

Fragments

Fragments are the pieces of blockchain data that represent all possible events related to the health of the blockchain (e.g. updates to the protocol), but also, and mainly, general records of information such as transactions and certificates.

Blocks

Blocks represent the spine of the blockchain, safely and securely linking blocks in a chain, whilst grouping valid fragments together.

Blocks are composed of 2 parts:

- The header

- The content

The header securely links the content and the blocks together, while the content is effectively a sequence of fragments.

Blockchain

The blockchain is the general set of rules and the blocks that are periodically created. Some of the rules and settings can be changed dynamically in the system by updates, while others are hardcoded in the genesis block (the first block of the blockchain).

+-------+      +-------+
|Genesis+<-----+Block 1+<--- ....
|Header |      |Header |
+---+---+      +---+---+
    |              |
+---v---+      +---v---+
|Genesis|      |Block 1|
|Content|      |Content|
+-------+      +-------+
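The linking shown in the diagram can be sketched as a toy hash chain. This is a simplified model under stated assumptions: a header here commits only to the parent header hash and a hash of the content, and BLAKE2b-256 from Python's hashlib is used as the hash function. The real Jörmungandr header carries more fields, and its exact encoding is not described in this chapter.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

ZERO_HASH = bytes(32)  # stand-in parent hash for the genesis header


@dataclass(frozen=True)
class Block:
    parent: Optional[bytes]       # header hash of the previous block; None for genesis
    fragments: Tuple[bytes, ...]  # block content: an ordered sequence of fragments

    def content_hash(self) -> bytes:
        h = hashlib.blake2b(digest_size=32)
        for fragment in self.fragments:
            # Length-prefix each fragment so different splits cannot collide.
            h.update(len(fragment).to_bytes(4, "big"))
            h.update(fragment)
        return h.digest()

    def header_hash(self) -> bytes:
        # The header commits to both the parent header and the content.
        parent = self.parent if self.parent is not None else ZERO_HASH
        return hashlib.blake2b(parent + self.content_hash(), digest_size=32).digest()


def verify_chain(blocks: Sequence[Block]) -> bool:
    """Check that each block points at the header hash of the block before it."""
    if not blocks or blocks[0].parent is not None:
        return False
    return all(cur.parent == prev.header_hash() for prev, cur in zip(blocks, blocks[1:]))


genesis = Block(parent=None, fragments=(b"initial funds",))
block1 = Block(parent=genesis.header_hash(), fragments=(b"tx-1", b"tx-2"))
print(verify_chain([genesis, block1]))  # True

# Changing the genesis content breaks the link stored in block 1's header.
tampered = Block(parent=None, fragments=(b"other funds",))
print(verify_chain([tampered, block1]))  # False
```

Because each header includes its parent's hash, altering any earlier block changes every hash after it, which is what makes the chain tamper-evident.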

Consensus

The node currently supports the following consensus protocols:

- Ouroboros BFT (OBFT)

- Ouroboros Genesis-Praos

Ouroboros BFT is a simple Byzantine Fault Tolerant (BFT) protocol where the block makers are a known list of leaders that take turns creating a block and broadcasting it on the network.
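A minimal sketch of the OBFT schedule, assuming the leaders take turns in a fixed round-robin order indexed by slot number. The leader strings are hypothetical placeholders for the leaders' public keys, and the exact indexing used by the node is not described here.

```python
from typing import Sequence


def bft_leader(leaders: Sequence[str], slot: int) -> str:
    """Return the leader scheduled for `slot`, assuming plain round-robin order."""
    if not leaders:
        raise ValueError("OBFT needs at least one leader")
    return leaders[slot % len(leaders)]


def is_lawful_bft_block(leaders: Sequence[str], slot: int, signer: str) -> bool:
    """A block is acceptable only if its signer is the leader scheduled for its slot."""
    return bft_leader(leaders, slot) == signer


leaders = ["leader-a", "leader-b", "leader-c"]  # hypothetical leader keys
print([bft_leader(leaders, slot) for slot in range(5)])
# ['leader-a', 'leader-b', 'leader-c', 'leader-a', 'leader-b']
print(is_lawful_bft_block(leaders, 4, "leader-a"))  # False
```

Since the schedule is public, every node can check who was allowed to sign a given slot without any extra communication.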

Ouroboros Genesis-Praos is a proof-of-stake (PoS) protocol where the block maker is chosen by a lottery in which each stake pool has a chance of being elected that is roughly proportional to its stake. Each lottery draw is private to each stake pool, so that the overall network doesn’t know in advance who can or cannot create blocks.

In Genesis-Praos, the slot duration is constant; however, the frequency of block creation is not, since whether a block is created in a given slot is a probabilistic event that depends on the stake and on consensus_genesis_praos_active_slot_coeff.

Note: In Genesis-Praos, if there is no stake in the system, no more blocks will be created, starting with the next epoch.
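In the Ouroboros Praos paper, a pool holding a fraction \( \alpha \) of the total stake is elected in a given slot with probability \( \phi_f(\alpha) = 1 - (1 - f)^{\alpha} \), where \( f \) is the active slot coefficient (here, consensus_genesis_praos_active_slot_coeff). The sketch below evaluates that formula and simulates the lottery, with a seeded uniform random draw standing in for each pool's private VRF output. It illustrates why block production is irregular and why it stops when there is no stake; it is not the node's VRF-based implementation, and the pool names and stakes are hypothetical.

```python
import random


def election_probability(relative_stake: float, active_slot_coeff: float) -> float:
    """phi_f(alpha) = 1 - (1 - f) ** alpha, the per-slot chance of being elected."""
    if not 0.0 <= relative_stake <= 1.0:
        raise ValueError("relative_stake must be between 0 and 1")
    if not 0.0 < active_slot_coeff <= 1.0:
        raise ValueError("active_slot_coeff must be in (0, 1]")
    return 1.0 - (1.0 - active_slot_coeff) ** relative_stake


def is_elected(relative_stake: float, active_slot_coeff: float, rng: random.Random) -> bool:
    """One private lottery draw; rng.random() stands in for the VRF output."""
    return rng.random() < election_probability(relative_stake, active_slot_coeff)


f = 0.1                                 # illustrative active slot coefficient
pools = {"pool-a": 0.6, "pool-b": 0.4}  # hypothetical relative stakes
rng = random.Random(42)
slots = 10_000
busy = sum(any(is_elected(s, f, rng) for s in pools.values()) for _ in range(slots))
# Roughly a fraction f of slots get at least one leader; the exact count varies.
print(busy / slots)
print(election_probability(0.0, f))     # 0.0: no stake, no blocks
```

With all stake active, the chance that at least one pool is elected in a slot works out to exactly \( f \), so on average only that fraction of slots contains a block.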

Leadership

The leadership represents, in abstract terms, who the overall leaders of the system are, and allows each individual node to check that specific blocks were lawfully created in the system.

The leadership is re-evaluated at each new epoch and is constant for the duration of an epoch.

Leader

Leaders are an abstraction for the specific actors that have the ability to create blocks. In OBFT mode, the leader is just the owner of a cryptographic key, whereas in Genesis-Praos mode, the leader is a stake pool.

Transaction

Transactions form the cornerstone of the blockchain; they are one type of fragment, and also the most frequent one.

A transaction is composed of inputs and outputs: on one side, the inputs represent coins being spent, and on the other side, the outputs represent coins being received.

Inputs        Alice (80$)      Bob (20$)
                   \             /
                    \           /
                     -----------
                        100$
                      ---------
                     /         \
Outputs      Charlie (50$)    Dan (50$)

Transactions have fees, defined by the blockchain settings, and the following invariant holds:

\( \sum \text{Inputs} = \sum \text{Outputs} + \text{fees} \)
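The invariant can be checked as below. This sketch assumes integer amounts and a hypothetical linear fee of the form constant + coefficient × (number of inputs + outputs); that formula and the names `LinearFee` and `is_balanced` are illustrative assumptions, since this section only says that fees are defined by the blockchain settings.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class LinearFee:
    """Hypothetical fee settings: constant + coefficient * (inputs + outputs)."""

    constant: int
    coefficient: int

    def fee_for(self, n_inputs: int, n_outputs: int) -> int:
        return self.constant + self.coefficient * (n_inputs + n_outputs)


def is_balanced(inputs: Sequence[int], outputs: Sequence[int], fees: LinearFee) -> bool:
    """Check sum(inputs) == sum(outputs) + fee for one transaction."""
    fee = fees.fee_for(len(inputs), len(outputs))
    return sum(inputs) == sum(outputs) + fee


# The example above, with no fees: 80 + 20 == 50 + 50 + 0.
print(is_balanced([80, 20], [50, 50], LinearFee(0, 0)))  # True
# With a fee of 2 + 1 per input/output (6 in total), outputs may only total 94.
print(is_balanced([80, 20], [50, 44], LinearFee(2, 1)))  # True
```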

A transaction needs to be authorized by each of its inputs, through that input’s witness. In the most basic case, a witness is a cryptographic signature, but the type of witness can vary depending on the type of input.

Accounting

The blockchain has two methods of accounting which are interoperable:

- Unspent Transaction Output (UTXO)

- Accounts

UTXOs behave like cash/notes: they work like fixed-denomination tickets that are accumulated. This is the accounting model found in Bitcoin. A UTXO is uniquely referenced by its transaction ID and its index.

Accounts behave like a bank account and are simpler to use, since exact amounts can be used. This is the accounting model found in Ethereum. An account is uniquely identified by its public key.

Each input can refer to either an account or a UTXO, and similarly each output can refer to an account or represent a new UTXO.
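The two kinds of references can be modelled as below: a UTXO is addressed by the ID of the transaction that created it plus the output index, and an account by its public key. The type names, the `Ledger` class and the byte values are hypothetical, chosen only to contrast how the two models are spent; they do not reflect the node's actual encoding.

```python
from dataclasses import dataclass
from typing import Dict, Union


@dataclass(frozen=True)
class UtxoPointer:
    """A UTXO: the ID of the transaction that created it, plus the output index."""

    transaction_id: bytes
    output_index: int


@dataclass(frozen=True)
class AccountId:
    """An account, identified by its public key."""

    public_key: bytes


Input = Union[UtxoPointer, AccountId]


class Ledger:
    def __init__(self) -> None:
        self.utxos: Dict[UtxoPointer, int] = {}
        self.accounts: Dict[AccountId, int] = {}

    def spend(self, source: Input, amount: int) -> None:
        if isinstance(source, UtxoPointer):
            value = self.utxos.get(source)
            if value is None:
                raise KeyError("unknown or already spent UTXO")
            if value != amount:
                # Like a banknote, a UTXO is consumed whole; change goes to a new output.
                raise ValueError("a UTXO must be spent in full")
            del self.utxos[source]
        else:
            balance = self.accounts.get(source, 0)
            if amount > balance:
                raise ValueError("insufficient funds")
            # An account can be debited by an exact amount.
            self.accounts[source] = balance - amount


ledger = Ledger()
note = UtxoPointer(transaction_id=bytes(32), output_index=0)
alice = AccountId(public_key=b"\xaa" * 32)
ledger.utxos[note] = 80
ledger.accounts[alice] = 30
ledger.spend(note, 80)   # the whole UTXO is consumed
ledger.spend(alice, 12)  # an exact amount leaves the account
print(ledger.accounts[alice], note in ledger.utxos)  # 18 False
```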

Network overview

Jörmungandr network capabilities are split into:

- the REST API, used for informational queries or control of the node;

- the gRPC API for blockchain protocol exchange and participation;

Here we only review the gRPC API, as the REST API is described in another chapter: go to the REST documentation.

The protocol

The protocol is based on gRPC, which combines commonly used protocols such as HTTP/2 and RPC.

More precisely, Jörmungandr utilises.

This choice was made because gRPC is already widely supported around the world, and because its use of standard protocols such as HTTP/2 makes it much easier for proxies and firewalls to recognise the protocol and permit the traffic.

Type of queries

The protocol allows you to send multiple types of messages between nodes:

- sync blocks up to the remote peer’s last block (tip);

- propose new fragments (new transactions, certificates, …): this is for fragment propagation;

- propose new blocks: this is for block propagation.

There are other commands that optimise the communication and synchronization between nodes that will be documented here in the future.

Another type of message is the Gossip message. These gossip messages allow nodes to exchange information (gossips) about other nodes on the network, allowing for peer discovery.

Peer to peer

Peer-to-peer connections are established using multiple components:

- A multilayered topology (e.g. Poldercast);

- Gossiping for node discoverability;

- Subscription mechanism for event propagation;

- Security and countermeasures: (such as Topology Policy for scoring and/or blacklisting nodes);

Multilayered topology

As described in the Poldercast paper, our network topology is built on multiple layers that allow for granular control of its behavior. In practice, this means a node will have different groups of nodes that it connects to, based on different algorithms; each of these groups is a subset of the whole list of known nodes.

In short we have:

- The Rings layer selects one or more predecessors and successors for each topic (Fragments or Blocks);

- The Vicinity layer selects nodes that have similar interests;

- The Cyclon layer selects nodes randomly.
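As an illustration of the Rings layer, the sketch below places node identifiers on a ring ordered by ID and, for each topic, picks the nearest predecessor and successor among the nodes subscribed to that topic. This is a simplified reading of the Poldercast idea under stated assumptions (integer node IDs, one neighbour on each side); the function names and IDs are hypothetical, and this is not the node's actual selection code.

```python
from bisect import bisect_left
from typing import Dict, Set, Tuple


def ring_neighbours(me: int, subscribers: Set[int]) -> Tuple[int, int]:
    """Closest (predecessor, successor) of `me` on a ring of node IDs, wrapping around."""
    others = sorted(node for node in subscribers if node != me)
    if not others:
        raise ValueError("no other node subscribes to this topic")
    i = bisect_left(others, me)
    predecessor = others[i - 1]          # i == 0 wraps to the largest ID
    successor = others[i % len(others)]  # past the end wraps to the smallest ID
    return predecessor, successor


def rings_view(me: int, topics: Dict[str, Set[int]]) -> Dict[str, Tuple[int, int]]:
    """One (predecessor, successor) pair per topic that has other subscribers."""
    return {
        topic: ring_neighbours(me, subscribers)
        for topic, subscribers in topics.items()
        if subscribers - {me}
    }


topics = {"blocks": {10, 20, 25, 30}, "fragments": {5, 25, 40}}  # hypothetical node IDs
print(rings_view(25, topics))  # {'blocks': (20, 30), 'fragments': (5, 40)}
```

Keeping a neighbour on each side per topic means a message on that topic can travel around the ring and reach every subscriber, even if the other layers select no peers.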

However, we keep the option open to remove some of these layers or to add new ones, such as:

- A layer to allow privileged connections between stake pools;

- A layer for the user’s whitelist: a list of nodes the user considers trustworthy, which we could use to check the current state of the network and verify that the user’s node is not in a long-running fork;

Gossiping

Gossiping is the process used for peer discovery. It allows two things:

- For any node to advertise itself as discoverable;

- To discover new nodes via exchanging a list of nodes (gossips);

The gossips are selected by the different layers of the multilayered topology. For the Poldercast modules, the gossips are selected just as in the paper. Additional modules may select new nodes in the gossip list or may decide to not add any new information.

Subscription mechanism

Based on the multilayered topology, the node will open multiplexed and bi-directional connections (thanks to industry-standard gRPC, this comes for free). These bi-directional connections are used to propagate events such as:

- Gossiping events, when 2 nodes exchange gossips for peer discovery;

- Fragment events, when a node wants to propagate a new fragment to other nodes;

- Block events, when a node wants to propagate a new block creation event

Security and countermeasures

To facilitate the handling of unreachable or misbehaving nodes, we have built node policy tooling. Currently, we collect connectivity statuses for each node. The policy can then be tuned over the collected data to apply some parameters when connecting to a given node, as well as to ban nodes from our topology.

For each node, the following data is collected:

Connection statuses:

- The failed connection attempts and when they happened;

- Latency

- Last message used per topic item (last time a fragment has been received from that node, last time a block has been received from that node…)

In the future, we may expand the policy to include data collected at the blockchain level, like:

- Faults (e.g. trying to send an invalid block)

- Contributions in the network

- Their blockchain status (e.g. tips)

Policy

The p2p policy provides finer control over how to handle nodes flagged as not behaving as expected (see the list of data collected).

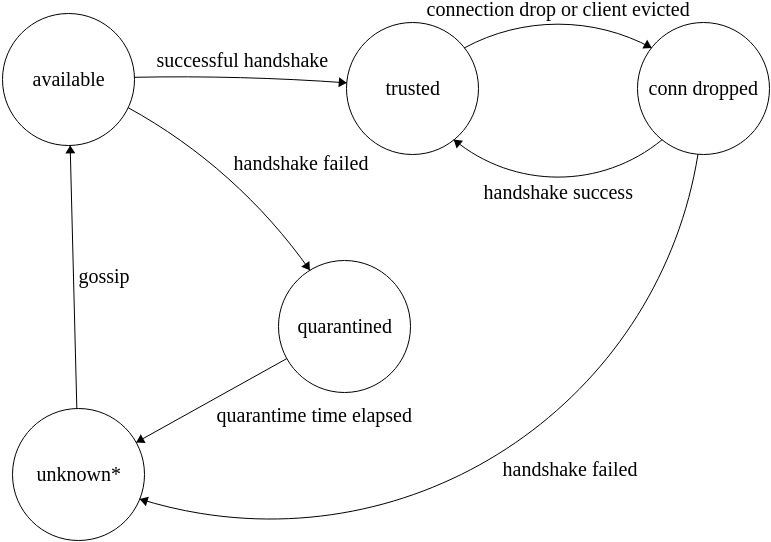

It currently works with 4 levels: trusted, possible contact, quarantined and forgotten. Each gossip about a new node creates a new entry in the list of possible contacts. Then the policy, based on the logged data associated with this node, may decide to put this node in quarantine for a certain amount of time.

Trusted nodes are the ones to which we were able to connect successfully. A connectivity report against those nodes will make them transition to the possible contact level, while a successful connection attempt will promote them again to trusted.

Changes from one level to another are best effort only. Applying the policy may be costly, so the node applies it only to the nodes it is interested in (on a gossip update, or when reporting an issue against a node). This ensures that the node does not spend too much time policing its database, and that only the nodes of interest are kept up to date. However, the node may choose, at a convenient time, to apply the policy to the whole p2p database. This is not enforced by the protocol.

| Disposition | Description |
|---|---|
| available | Node is available for the p2p topology for view selection and gossips. |
| quarantined | Node is not available for the p2p topology for view selection or gossips. After a certain amount of time, if the node is still being gossiped about, it will be moved to available. |
| forgotten | A forgotten node is simply removed from the whole p2p database. However, if the node is still being gossiped about, it will be added back as available and the process will start again. |
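The dispositions in the table can be pictured as a small state machine. The sketch below is a hypothetical model of the rules described above, not the node's policy code: a report quarantines a peer, a gossip received after the quarantine has expired makes it available again, and a forgotten peer is simply absent until it is gossiped about again. Like the node, it only re-evaluates a peer when that peer is touched. The class, method names and time units (seconds) are assumptions.

```python
from enum import Enum
from typing import Dict, Optional, Tuple


class Disposition(Enum):
    AVAILABLE = "available"
    QUARANTINED = "quarantined"


class PeerPolicy:
    def __init__(self, quarantine_duration: float) -> None:
        self.quarantine_duration = quarantine_duration
        # peer id -> (disposition, time at which the quarantine ends)
        self.peers: Dict[str, Tuple[Disposition, float]] = {}

    def on_gossip(self, peer: str, now: float) -> None:
        """A gossip mentions `peer`: add it, or lift a quarantine that has expired."""
        state = self.peers.get(peer)
        if state is None:
            self.peers[peer] = (Disposition.AVAILABLE, 0.0)
        elif state[0] is Disposition.QUARANTINED and now >= state[1]:
            self.peers[peer] = (Disposition.AVAILABLE, 0.0)

    def on_report(self, peer: str, now: float) -> None:
        """A report against `peer` puts it in quarantine for a fixed duration."""
        self.peers[peer] = (Disposition.QUARANTINED, now + self.quarantine_duration)

    def forget(self, peer: str) -> None:
        """Remove the peer entirely; a later gossip re-adds it as available."""
        self.peers.pop(peer, None)

    def disposition(self, peer: str) -> Optional[Disposition]:
        state = self.peers.get(peer)
        return None if state is None else state[0]


policy = PeerPolicy(quarantine_duration=1800)  # 30 minutes, like quarantine_duration: 30m
policy.on_gossip("peer-1", now=0)
policy.on_report("peer-1", now=10)
policy.on_gossip("peer-1", now=100)    # quarantine not over yet
print(policy.disposition("peer-1"))    # Disposition.QUARANTINED
policy.on_gossip("peer-1", now=2000)   # quarantine over and still gossiped about
print(policy.disposition("peer-1"))    # Disposition.AVAILABLE
```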

Node organization

Secure Enclave

The secure enclave is the component containing the secret cryptographic material, and offering safe and secret high level interfaces to the rest of the node.

Network

The node’s network consists of 3 components:

- Intercommunication API (GRPC)

- Public client API (REST)

- Control client API (REST)

More detailed information here

Intercommunication API (GRPC)

This interface is a binary, efficient interface using the protobuf format and GRPC standard. The protobuf files of types and interfaces are available in the source code.

The interface is responsible for communicating with other nodes in the network:

- block sending and receiving

- fragments (transaction, certificates) broadcast

- peer2peer gossip

Public API REST

This interface is for simple queries for clients like:

- Wallet Client & Middleware

- Analytics & Debugging tools

- Explorer

It is recommended not to open this interface to the public.

TODO: Add a high level overview of what it does

Control API REST

This interface is not finished, but it is a restricted interface with ACLs, used to perform maintenance tasks on the process:

- Shutdown

- Load/Retire cryptographic material

TODO: Detail the ACL/Security measure

Stake

In proof of stake, participants are issued a stake equivalent to the amount of coins they own. The stake is then used to allow participation in the protocol, simply explained as:

The more stake one has, the more likely one will participate in the good health of the network.

When using the BFT consensus, the stake doesn’t influence how the system runs, but stake can still be manipulated for a later transition of the chain to another consensus mode.

Stake in the Account Model

Accounts are represented by a single type of address and are composed only of a public key. An account accumulates funds, and its stake power is directly represented by the amount it contains.

For example:

A - Account with 30$ => Account A has stake of 30

B - Account with 0$ => Account B has no stake

The account might have a bigger stake than what it actually contains, since it could also have associated UTXOs, and this case is covered in the next section.

Stake in the UTXO Model

UTXOs are represented by two kinds of addresses:

- single address: this type of address has no associated stake

- group address: this type of address has an associated account, which receives the stake power of the UTXO’s value

For example, with the following UTXOs:

UTXO1 60$ (single address) => has stake of 0

UTXO2 50$ (group address A) \
                             ->- A - Account with 10$ => Account A has stake of 100
UTXO3 40$ (group address A) /

UTXO4 20$ (group address B) -->- B - Account with 5$ => Account B has stake of 25
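The stake rules above can be computed as follows. An account’s stake is its own balance plus the value of every group-address UTXO associated with it, while single-address UTXOs contribute nothing. The data layout (tuples of value and optional account name) is a hypothetical simplification; it reproduces the numbers in the example.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# (value, associated account); the account is None for a single address.
Utxo = Tuple[int, Optional[str]]


def stake_distribution(accounts: Dict[str, int], utxos: List[Utxo]) -> Dict[str, int]:
    stake: Dict[str, int] = defaultdict(int)
    for account, balance in accounts.items():
        stake[account] += balance  # an account's own funds count as stake
    for value, account in utxos:
        if account is not None:    # single-address UTXOs carry no stake
            stake[account] += value
    return dict(stake)


accounts = {"A": 10, "B": 5}
utxos = [(60, None), (50, "A"), (40, "A"), (20, "B")]
print(stake_distribution(accounts, utxos))  # {'A': 100, 'B': 25}
```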

Stake pool

Stake pools are the trusted block creators in the Genesis-Praos system. A pool is explicitly declared on the network by its owners and contains metadata and cryptographic material.

Stake pools have no stake power of their own; instead, participants in the network delegate their stake to a pool to run the operation.

Stake Delegation

Stake can, and needs to, be delegated to stake pools in the system. Delegations can change over time through the publication of a new delegation certificate.

Delegation certificates are a simple declaration statement of the form:

Account 'A' delegate to Stake Pool 'Z'

Effectively it assigns the stake in the account and its associated UTXO stake to the pool it delegates to until another delegation certificate is made.
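Delegation then turns per-account stake into per-pool stake. In this sketch, assuming each account has at most one active delegation and that a newer certificate replaces the previous one, a pool’s stake is the sum of the stake of all accounts delegating to it, and undelegated stake counts for no pool. Account and pool names are illustrative.

```python
from typing import Dict, Iterable, Tuple


def apply_certificates(certificates: Iterable[Tuple[str, str]]) -> Dict[str, str]:
    """Replay (account, pool) delegation certificates in order; the latest one wins."""
    delegation: Dict[str, str] = {}
    for account, pool in certificates:
        delegation[account] = pool
    return delegation


def pool_stake(account_stake: Dict[str, int], delegation: Dict[str, str]) -> Dict[str, int]:
    """Sum each account's stake into the pool it currently delegates to."""
    totals: Dict[str, int] = {}
    for account, stake in account_stake.items():
        pool = delegation.get(account)
        if pool is not None:  # undelegated stake does not help any pool
            totals[pool] = totals.get(pool, 0) + stake
    return totals


certificates = [("A", "Z"), ("B", "Z"), ("B", "Y")]  # B later re-delegates to Y
delegation = apply_certificates(certificates)
print(pool_stake({"A": 100, "B": 25, "C": 7}, delegation))  # {'Z': 100, 'Y': 25}
```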

Quickstart

The Rust node comes with tools and help to quickly start a node and connect to the blockchain.

It is compatible with most platforms and it is pre-packaged for some of them.

Here we will see how to install jormungandr and its helper jcli, and how to quickly connect to a given blockchain.

There are three possible ways to start jormungandr.

As a passive node in an existing network

As described here.

The passive node is the most common type of node on the network. It can be used to download the blocks and broadcast transactions to peers. However, it doesn’t have cryptographic materials or any means to create blocks. This type of node is mostly used for wallets, explorers or relays.

As a node generating blocks in an existing network

The network could be running either BFT or Genesis consensus. In the former case, the node must have the private key of a registered slot leader. In the latter case, the private keys of a registered stake pool are needed.

More information here

Creating your own network

This is similar to the previous case, but configuring a genesis file is needed. Consult the Advanced section for more information on this procedure.

Command line tools

The software is bundled with 2 different command-line tools:

- jormungandr: the node;

- jcli: Jörmungandr Command Line Interface, the helpers and primitives to run and interact with the node.

Installation

From a release

This is the recommended method. Releases are all available here.

From source

Jörmungandr’s source code is available on GitHub. Follow the instructions to build the software from source.

Help and auto completion

All commands come with usage help with the option --help or -h.

For jcli, it is possible to generate the auto completion with:

jcli auto-completion bash ${HOME}/.bash_completion.d

Supported shells are:

- bash

- fish

- zsh

- powershell

- elvish

Note:

Make sure the ${HOME}/.bash_completion.d directory already exists on your disk.

In order to use auto completion you still need to:

source ${HOME}/.bash_completion.d/jcli.bash

You can also put it in your ${HOME}/.bashrc.

Starting a passive node

In order to start the node, you first need to gather the blockchain information you need to connect to.

- the hash of the genesis block of the blockchain, this will be the source of truth of the blockchain. It is 64 hexadecimal characters.

- the trusted peers identifiers and access points.

This information is essential to start your node securely.

The genesis block is the first block of the blockchain. It contains the static parameters of the blockchain as well as the initial funds. Your node will use the hash to retrieve it from the other peers. The hash also allows the node to verify the integrity of the downloaded genesis block.

The trusted peers are the nodes in the public network that your Node will trust in order to initialise the Peer To Peer network.
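Before starting the node, it can help to sanity-check the genesis block hash you were given. This minimal sketch only validates the format stated above (exactly 64 hexadecimal characters, i.e. 32 bytes) and normalises case so two copies of the hash can be compared; it does not recompute the hash, which is what `jcli genesis hash` is for. The function name is hypothetical.

```python
import string

HEX_DIGITS = set(string.hexdigits)


def parse_genesis_hash(text: str) -> bytes:
    """Validate a genesis block hash given as 64 hex characters; return its 32 bytes."""
    candidate = text.strip()
    if len(candidate) != 64:
        raise ValueError(f"expected 64 hex characters, got {len(candidate)}")
    if not set(candidate) <= HEX_DIGITS:
        raise ValueError("genesis hash must contain only hexadecimal characters")
    return bytes.fromhex(candidate)


given = "ab" * 32  # placeholder, not a real network's hash
print(len(parse_genesis_hash(given)))  # 32
# Comparing parsed bytes ignores upper/lower-case differences between two copies.
print(parse_genesis_hash(given.upper()) == parse_genesis_hash(given))  # True
```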

The node configuration

Your node configuration file may look like the following:

Note

This config won’t work as is: the IP address and port of the trusted peer should be those of an already running node. Also, the public_address (‘u.x.v.t’) should be a valid address (you can use an internal one, e.g. 127.0.0.1). Furthermore, you need permission to write to the path specified by the storage config.

storage: "/mnt/cardano/storage"

rest:
  listen: "127.0.0.1:8443"

p2p:
  trusted_peers:
    - address: "/ip4/104.24.28.11/tcp/8299"
      id: ad24537cb009bedaebae3d247fecee9e14c57fe942e9bb0d

Description of the fields:

- storage: (optional) Path to the storage. If omitted, the blockchain is stored in memory only.
- log: (optional) Logging configuration:
  - level: log messages minimum severity. If not configured anywhere, defaults to “info”. Possible values: “off”, “critical”, “error”, “warn”, “info”, “debug”, “trace”.
  - format: Log output format, plain or json.
  - output: Log output destination. Possible values are:
    - stdout: standard output
    - stderr: standard error
    - syslog: syslog (only available on Unix systems)
    - syslogudp: remote syslog (only available on Unix systems)
      - host: address and port of a syslog server
      - hostname: hostname to attach to syslog messages
    - journald: journald service (only available on Linux with systemd, if jormungandr is built with the systemd feature)
    - gelf: Configuration fields for the GELF (Graylog) network logging protocol (if jormungandr is built with the gelf feature):
      - backend: hostname:port of a GELF server
      - log_id: identifier of the source of the log, for the host field in the messages.
    - file: path to the log file.
- rest: (optional) Configuration of the REST endpoint.
  - listen: address:port to listen on for requests
  - tls: (optional) enables TLS and disables plain HTTP if provided
    - cert_file: path to the server X.509 certificate chain file; must be PEM-encoded and contain at least 1 item
    - priv_key_file: path to the server private key file; must be PKCS8 with a single PEM-encoded, unencrypted key
  - cors: (optional) CORS configuration; if not provided, CORS is disabled
    - allowed_origins: (optional) allowed origins; if none are provided, the request origin is echoed
    - max_age_secs: (optional) maximum CORS caching time in seconds; if none is provided, caching is disabled
- p2p: P2P network settings
  - trusted_peers: (optional) the list of nodes’ multiaddrs, with their associated public_id, to connect to in order to bootstrap the P2P topology (and bootstrap our local blockchain);
  - public_id: (optional) the node’s public ID that will be used to identify this node to the network.
  - public_address: multiaddr string specifying the address of the P2P service. This is the public address that will be distributed to other peers of the network that may be interested in participating in the blockchain dissemination with the node.
  - listen: (optional) address:port the node will listen on to receive p2p connections. Can be left empty, in which case the node will listen on whatever value was given to public_address.
  - topics_of_interest: The dissemination topics this node is interested in hearing about:
    - messages: Transactions and other ledger entries. Typical setting for a non-mining node: low. For a stake pool: high;
    - blocks: Notifications about new blocks. Typical setting for a non-mining node: normal. For a stake pool: high.
  - max_connections: The maximum number of simultaneous P2P connections this node should maintain.
- explorer: (optional) Explorer settings
  - enabled: True or false
- no_blockchain_updates_warning_interval: (optional, seconds) if no new blocks were received after this period of time, the node will start sending you warnings in the logs.
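Peer addresses in this configuration use the multiaddr notation /ip4/address/tcp/port. As a small illustration, assuming only that IPv4/TCP form (multiaddr supports many more protocols, which this hypothetical helper simply rejects), such a string can be split into a host and a port:

```python
import ipaddress
from typing import Tuple


def parse_ip4_tcp_multiaddr(addr: str) -> Tuple[str, int]:
    """Split "/ip4/<address>/tcp/<port>" into (host, port); other forms are rejected."""
    parts = addr.split("/")
    # "/ip4/1.2.3.4/tcp/3000".split("/") gives ['', 'ip4', '1.2.3.4', 'tcp', '3000']
    if len(parts) != 5 or parts[0] != "" or parts[1] != "ip4" or parts[3] != "tcp":
        raise ValueError(f"unsupported multiaddr: {addr!r}")
    host = str(ipaddress.IPv4Address(parts[2]))  # raises ValueError if not valid IPv4
    port = int(parts[4])                         # raises ValueError if not a number
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return host, port


print(parse_ip4_tcp_multiaddr("/ip4/104.24.28.11/tcp/8299"))  # ('104.24.28.11', 8299)
```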

Starting the node

jormungandr --config config.yaml --genesis-block-hash 'abcdef987654321....'

The ‘abcdef987654321….’ part refers to the hash of the genesis block. This should be given to you by one of the peers in the network you are connecting to.

In case you have the genesis file (for example block-0.bin, because you are creating the network) you can get this hash with jcli.

jcli genesis hash --input block-0.bin

or, in case you only have the yaml file

jcli genesis encode --input genesis.yaml | jcli genesis hash

REST Api

It is possible to query the node via its REST Interface.

In the node configuration, you have set something like:

# ...
rest:
  listen: "127.0.0.1:8443"
# ...

This is the REST endpoint to talk to the node, to query blocks or send transactions.

It is possible to query the node stats with the following endpoint:

curl http://127.0.0.1:8443/api/v0/node/stats

The result may be:

{"blockRecvCnt":120,"txRecvCnt":92,"uptime":245}

THE REST API IS STILL UNDER DEVELOPMENT

Please note that the end points and the results may change in the future.
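The same query can be made from a script. This sketch uses only Python’s standard library and assumes the REST listener from the configuration above (127.0.0.1:8443) and the field names shown in the sample result; since the API is still under development, both may differ on your node. The helper names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict


def parse_node_stats(body: str) -> Dict[str, Any]:
    """Decode a /api/v0/node/stats response body into a dictionary."""
    stats = json.loads(body)
    if not isinstance(stats, dict):
        raise ValueError("expected a JSON object")
    return stats


def fetch_node_stats(base_url: str = "http://127.0.0.1:8443", timeout: float = 5.0) -> Dict[str, Any]:
    """Query a running node; needs the REST interface to be reachable at base_url."""
    with urllib.request.urlopen(f"{base_url}/api/v0/node/stats", timeout=timeout) as resp:
        return parse_node_stats(resp.read().decode("utf-8"))


sample = '{"blockRecvCnt":120,"txRecvCnt":92,"uptime":245}'
stats = parse_node_stats(sample)
print(stats["blockRecvCnt"], stats["uptime"])  # 120 245
```

With a node running, call fetch_node_stats() instead of parsing the sample string.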

To see the whole Node API documentation:

Explorer mode

The node can be configured to work as an explorer. This consumes more resources, but makes it possible to query data that is otherwise not available.

Configuration

There are two ways of enabling the explorer API.

It can either be done by passing the --enable-explorer flag on the start arguments or by the config file:

explorer:
  enabled: true

CORS

CORS for the explorer API is configured in the REST section of the config, as documented here.

API

A graphql interface can be used to query the explorer data. When enabled, two endpoints are available in the REST interface:

- /explorer/graphql

- /explorer/playground

The first is the one that queries are made against, for example:

curl \
  -X POST \
  -H "Content-Type: application/json" \
  --data '{'\
'"query": "{'\
' status {'\
' latestBlock {'\
' chainLength'\
' id'\
' previousBlock {'\
' id'\
' }'\
' }'\
' }'\
'}"'\
'}' \
  http://127.0.0.1:8443/explorer/graphql

While the second serves an in-browser graphql IDE that can be used to try queries interactively.
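The curl request above can also be sent from Python. This sketch builds the same query into a JSON body with json.dumps (which avoids the shell quoting) and POSTs it to /explorer/graphql. It assumes the explorer is enabled and the REST listener is at 127.0.0.1:8443 as in the earlier examples; the response shape is not documented here, so run_query returns the decoded JSON as-is. The function names are hypothetical.

```python
import json
import urllib.request

LATEST_BLOCK_QUERY = """
{
  status {
    latestBlock {
      chainLength
      id
      previousBlock {
        id
      }
    }
  }
}
"""


def build_graphql_request(url: str, query: str) -> urllib.request.Request:
    """Wrap a GraphQL query in a JSON POST request."""
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def run_query(url: str = "http://127.0.0.1:8443/explorer/graphql",
              query: str = LATEST_BLOCK_QUERY, timeout: float = 5.0):
    """Send the query to a running explorer node and decode the JSON response."""
    with urllib.request.urlopen(build_graphql_request(url, query), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```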

How to start a node as a leader candidate

Gathering data

As in the passive node case, two things are needed to connect to an existing network:

- the hash of the genesis block of the blockchain, this will be the source of truth of the blockchain. It is 64 hexadecimal characters.

- the trusted peers identifiers and access points.

The node configuration could be the same as that for running a passive node.

There are some differences depending on whether you are connecting to a network running the Genesis or the BFT consensus protocol.

Connecting to a genesis blockchain

Registering a stake pool

In order to be able to generate blocks in an existing genesis network, a registered stake pool is needed.

Creating the secrets file

Put the node id and private keys in a yaml file in the following way:

Example

filename: node_secret.yaml

genesis:
  sig_key: Content of stake_pool_kes.prv file
  vrf_key: Content of stake_pool_vrf.prv file
  node_id: Content of stake_pool.id file

Starting the Genesis node

jormungandr --genesis-block-hash asdf1234... --config config.yaml --secret node_secret.yaml

The ‘asdf1234…’ part should be the actual block0 hash of the network.

Connecting to a BFT blockchain

In order to generate blocks, the node should be registered as a slot leader in the network and started in the following way.

The secret file

Put the secret key in a YAML file, e.g. node_secret.yaml, as follows:

bft:
  signing_key: ed25519_sk1kppercsk06k03yk4qgea....

where signing_key is a private key associated with the public id of a slot leader.

Starting the BFT node

jormungandr --genesis-block-hash asdf1234... --config node.config --secret node_secret.yaml

The ‘asdf1234…’ part should be the actual block0 hash of the network.

Configuration

This chapter covers the node documentation, necessary to have a working system. It covers the network, logging and storage parameters.

Node Configuration

This is a common example of a Jörmungandr node configuration file, typically named node-config.yaml.

However, yours will vary depending on your needs.

Additionally, this configuration has been tested on a specific Jörmungandr version and may change with newer versions.

It’s important to keep in mind that the trusted_peers portion of this configuration will be different for each Cardano blockchain network.

If you’re trying to connect this node to a specific network, you need to know:

- its genesis block hash

- its associated list of trusted peers.

Example Configuration - 1:

---
log:
  output: stderr
  level: info
  format: plain

http_fetch_block0_service:
  - https://url/jormungandr-block0/raw/master/data

skip_bootstrap: false # If set to true - will skip the bootstrapping phase

bootstrap_from_trusted_peers: false

p2p:
  public_address: "/ip4/X.X.X.X/tcp/Y" # This should match your public IP address (X) and port number (Y)
  #listen: 0.0.0.0:Y
  topics_of_interest:
    blocks: normal # Default is normal - set to high for stakepool
    messages: low # Default is low - set to high for stakepool
  allow_private_addresses: false
  max_connections: 256
  max_client_connections: 192
  gossip_interval: 10s
  max_bootstrap_attempts: # Default is not set
  trusted_peers:
    - address: "/ip4/13.230.137.72/tcp/3000"
      id: e4fda5a674f0838b64cacf6d22bbae38594d7903aba2226f
    - address: "/ip4/13.230.48.191/tcp/3000"
      id: c32e4e7b9e6541ce124a4bd7a990753df4183ed65ac59e34
    - address: "/ip4/18.196.168.220/tcp/3000"
      id: 74a9949645cdb06d0358da127e897cbb0a7b92a1d9db8e70
    - address: "/ip4/3.124.132.123/tcp/3000"
      id: 431214988b71f3da55a342977fea1f3d8cba460d031a839c
    - address: "/ip4/18.184.181.30/tcp/3000"
      id: e9cf7b29019e30d01a658abd32403db85269fe907819949d
    - address: "/ip4/184.169.162.15/tcp/3000"
      id: acaba9c8c4d8ca68ac8bad5fe9bd3a1ae8de13816f40697c
    - address: "/ip4/13.56.87.134/tcp/3000"
      id: bcfc82c9660e28d4dcb4d1c8a390350b18d04496c2ac8474
  policy:
    quarantine_duration: 30m
    quarantine_whitelist:
      - "/ip4/13.230.137.72/tcp/3000"
      - "/ip4/13.230.48.191/tcp/3000"
      - "/ip4/18.196.168.220/tcp/3000"
  layers:
    preferred_list:
      view_max: 20
      peers:
        - address: "/ip4/13.230.137.72/tcp/3000"
          id: e4fda5a674f0838b64cacf6d22bbae38594d7903aba2226f
        - address: "/ip4/13.230.48.191/tcp/3000"
          id: c32e4e7b9e6541ce124a4bd7a990753df4183ed65ac59e34
        - address: "/ip4/18.196.168.220/tcp/3000"
          id: 74a9949645cdb06d0358da127e897cbb0a7b92a1d9db8e70

rest:
  listen: 127.0.0.1:3100

storage: "./storage"

explorer:
  enabled: false

mempool:
  pool_max_entries: 100000
  log_max_entries: 100000

leadership:
  logs_capacity: 1024

no_blockchain_updates_warning_interval: 15m

Note: The node configuration uses the YAML format.

Advanced

Rewards report

Starting the node jormungandr with the command-line option --rewards-report-all will collect a thorough report of all the reward distributions. It can then be accessed via the REST endpoints /api/v0/rewards/history/1 or /api/v0/rewards/epoch/10.

This is not a recommended setting, as it may consume memory and may introduce some latency.

Handling of time-consuming transactions

By default, we allow a single transaction to delay a block by 50 slots. This can be changed by adjusting the block_hard_deadline setting.

The following is deprecated and will be removed

If you want to record the reward distributions in a directory, it is possible to set the environment variable JORMUNGANDR_REWARD_DUMP_DIRECTORY=/PATH/TO/DIR/TO/WRITE/REWARD.

If an error occurs while dumping the reward, the node will panic with an appropriate error message.

Leadership

The leadership field in your node config file is not mandatory; by default it is set as follows:

leadership:
  logs_capacity: 1024

logs_capacity: the maximum number of logs to keep in memory. Once the capacity is reached, older logs will be removed in order to leave more space for new ones [default: 1024]
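The eviction rule for logs_capacity behaves like a fixed-size ring buffer. A minimal sketch using collections.deque with maxlen, where the class name and the log entries are placeholder strings rather than the node’s actual leadership log records:

```python
from collections import deque
from typing import List


class LeadershipLogs:
    """Keep at most `logs_capacity` entries; the oldest are dropped first."""

    def __init__(self, logs_capacity: int = 1024) -> None:
        if logs_capacity <= 0:
            raise ValueError("logs_capacity must be positive")
        self._logs = deque(maxlen=logs_capacity)  # deque discards from the left when full

    def record(self, entry: str) -> None:
        self._logs.append(entry)

    def entries(self) -> List[str]:
        return list(self._logs)


logs = LeadershipLogs(logs_capacity=3)
for slot in range(5):
    logs.record(f"scheduled at slot {slot}")
print(logs.entries())
# ['scheduled at slot 2', 'scheduled at slot 3', 'scheduled at slot 4']
```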

Logging

The following options are available in the log section:

- level: log messages minimum severity. If not configured anywhere, defaults to info. Possible values: off, critical, error, warn, info, debug, trace

- format: Log output format, plain or json

- output: Log output destination (multiple destinations are supported). Possible values are:
  - stdout: standard output
  - stderr: standard error
  - journald: journald service (only available on Linux with systemd, if jormungandr is built with the systemd feature)
  - gelf: Configuration fields for the GELF (Graylog) network logging protocol (if jormungandr is built with the gelf feature):
    - backend: hostname:port of a GELF server
    - log_id: identifier of the source of the log, for the host field in the messages
  - file: path to the log file

Example

A single configurable backend is supported.

Output to stdout

log:
  output: stdout
  level: trace
  format: plain

Output to a file

log:
  output:
    file: example.log
  level: info
  format: json

Mempool

When running an active node (BFT leader or stake pool), it is useful to be able to choose how to manage pending transactions: how long to keep them, how to prioritize them, etc.

The mempool field in your node config file is not mandatory; by default it is set as follows:

mempool:
  pool_max_entries: 10000
  log_max_entries: 100000

- pool_max_entries: (optional, default is 10000). Sets the maximum size of the mempool.

- log_max_entries: (optional, default is 100000). Sets the maximum size of the fragment logs.

- persistent_log: (optional, disabled by default) logs all incoming fragments to log files, rotated on an hourly basis. The value is an object, with the dir field specifying the name of the directory where log files are stored.

Persistent logs

A persistent log is a collection of records, each composed of a UNIX timestamp of when a fragment was registered by the mempool, followed by the hex-encoded fragment body. This log is a line-delimited JSON stream.

Keep in mind that enabling persistent logs could impair the performance of the node if disk operations are slow. Consider using a reasonably fast SSD for best results.
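A reader for such a log might look like the sketch below. The record layout is an assumption: the text only says each line is a JSON record holding a UNIX timestamp and the hex-encoded fragment body, so the field names `time` and `fragment` are hypothetical. Check a real log file before relying on them.

```python
import json
from typing import Iterable, Iterator, Tuple


def read_persistent_log(lines: Iterable[str]) -> Iterator[Tuple[int, bytes]]:
    """Yield (unix_timestamp, fragment_bytes) from line-delimited JSON records.

    The keys "time" and "fragment" are assumed names, not documented ones.
    """
    for line_no, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        record = json.loads(line)
        try:
            timestamp = int(record["time"])
            body = bytes.fromhex(record["fragment"])
        except (KeyError, TypeError, ValueError) as err:
            raise ValueError(f"malformed record on line {line_no}") from err
        yield timestamp, body


sample = [
    '{"time": 1600000000, "fragment": "00ff10"}',
    "",
    '{"time": 1600000060, "fragment": "abcd"}',
]
for timestamp, body in read_persistent_log(sample):
    print(timestamp, body.hex())
# 1600000000 00ff10
# 1600000060 abcd
```

To read an actual file, pass an open file object (`with open(path) as f: read_persistent_log(f)`), since iterating over it yields lines.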

Node network

There are 2 different network interfaces, which are covered by their respective sections:

rest:
  ...
p2p:
  ...

REST interface configuration

- listen: listen address
- tls: (optional) enables TLS and disables plain HTTP if provided
  - cert_file: path to the server X.509 certificate chain file; must be PEM-encoded and contain at least 1 item
  - priv_key_file: path to the server private key file; must be PKCS8 with a single PEM-encoded, unencrypted key
- cors: (optional) CORS configuration; if not provided, CORS is disabled
  - allowed_origins: (optional) allowed origins; if none are provided, the request origin is echoed. Note that an origin should include a scheme, for example: http://127.0.0.1:8080.
  - max_age_secs: (optional) maximum CORS caching time in seconds; if none is provided, caching is disabled

Configuring TLS

To enable TLS, you must provide certificate and private key files.

jcli TLS requirements

Note that jormungandr itself has no specific requirements for TLS certificates: you may use any certificate, including a self-signed one, as long as you do not intend to use jcli.

The cryptography standards used by jcli, as well as by all modern browsers and many HTTP clients, place the following requirements on certificates:

- A certificate should adhere to X.509 v3 with appropriate key usage settings and subject alternative name.

- A certificate must not be self-signed.

Given that, your options are to either get a certificate from a well-known CA (Let’s Encrypt will do; jcli uses Mozilla’s CA bundle for verification) or create your own local CA and provide the root certificate to jcli via the --tls-cert-path option.

Creating a local CA using OpenSSL and EasyRSA

EasyRSA is a set of scripts that use OpenSSL and give you an easier experience with setting up your local CA. You can download them here.

1. Go to easy-rsa/easy-rsa3.

2. Configure your CA. To do that, create the configuration file (cp vars.example vars), open it with the text editor of your choice (for example, vim vars), and uncomment and edit the fields you need to change. Each CA needs to edit these lines (find them in your vars file) according to its organization structure:

       #set_var EASYRSA_REQ_COUNTRY    "US"
       #set_var EASYRSA_REQ_PROVINCE   "California"
       #set_var EASYRSA_REQ_CITY       "San Francisco"
       #set_var EASYRSA_REQ_ORG        "Copyleft Certificate Co"
       #set_var EASYRSA_REQ_EMAIL      "me@example.net"
       #set_var EASYRSA_REQ_OU         "My Organizational Unit"

3. When your configuration is ready, run ./easyrsa init-pki and ./easyrsa build-ca nopass. You will be prompted to set the name of your CA.

4. Run ./easyrsa gen-req server nopass to create a new private key and a certificate signing request. You will be prompted to enter the host name (localhost for local testing).

5. Run ./easyrsa sign-req server server to sign the request.

To use the generated certificate, reference it and the corresponding key in your jormungandr config:

rest:
  tls:
    cert_file: <path to server.crt>
    priv_key_file: <path to server.key>

Pass the CA certificate to jcli with the --tls-cert-path option.

P2P configuration

- trusted_peers: (optional) the list of nodes’ multiaddrs to connect to in order to bootstrap the p2p topology (and bootstrap our local blockchain). Note that you can use a DNS name in the following format: /dns4/node.example.com/tcp/3000. Use dns6 instead of dns4 if you want the peer to connect over IPv6.

- public_address: the multiaddr to listen on and accept connections from. This is the public address that will be distributed to other peers of the network that may be interested in participating in blockchain dissemination with the node. Currently only TCP is supported.

- node_key_file: (optional) path to a file containing a bech32-encoded ed25519 secret key. The key is used to advertise the node in network gossip and to authenticate a connection to the node if the node is used as a trusted peer. Most users do not need to set this value, as the key will be randomly generated if the option is not present.

- listen: (optional) socket address (IP address and port separated by a colon) that specifies the interface address and port the node will listen on to receive p2p connections. It can be left empty, in which case the node listens on whatever value was given to public_address.

- topics_of_interest: (optional) the topics we are interested in hearing about:

  - messages: notifies other peers that this node is interested in transactions. Typical setting for a non-mining node: "low"; for a stake pool: "high".

  - blocks: notifies other peers that this node is interested in new blocks. Typical setting for a non-mining node: "normal"; for a stake pool: "high".

- max_connections: the maximum number of P2P connections this node should maintain. If not specified, an internal limit is used by default. [default: 256]

- max_client_connections: the maximum number of client P2P connections this node should keep open. [default: 192]

- policy: (optional) settings for the policy module

  - quarantine_duration: the time to leave a node in quarantine before allowing it back (or not) into the fold. It is recommended to leave the default value. [default: 30min]

  - quarantine_whitelist: a trusted list of peers that will not be quarantined under any circumstances. It should be a list of valid addresses, for example: ["/ip4/127.0.0.1/tcp/3000"]. By default this list is empty. [default: []]

- layers: (optional) settings for some of the poldercast custom layers (see below)

- gossip_interval: (optional) interval to start gossiping with new nodes. Changing the value affects bandwidth: the more often the node gossips, the more bandwidth it needs; the less often it gossips, the less resilient it is to node churn. [default: 10s]

- network-stuck_check: (optional) if no gossip has been received in the last interval, try to connect to nodes that were previously known to this node. This helps to rejoin the protocol in case there is a network outage and the node cannot reach any other peer. [default: 5min]

- max_bootstrap_attempts: (optional) number of times to retry bootstrapping from trusted peers. If not set (the default), the bootstrap process keeps retrying indefinitely until it completes successfully. If set to 0 (zero), the node skips bootstrap altogether, even if trusted peers are defined. If the node fails to bootstrap from any of the trusted peers and the number of bootstrap retry attempts is exceeded, the node continues to run without completing the bootstrap process. This allows the node to act as the first node in the p2p network (i.e. genesis node), or to immediately begin gossiping with the trusted peers if any are defined.
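The three behaviours of max_bootstrap_attempts (unset, 0, and a positive number) can be summarised with a small Python model. It is not the node's code: try_bootstrap is a hypothetical stand-in for one bootstrap pass over the trusted peers, and counting the first attempt towards the limit is an assumption.

```python
from typing import Callable, Optional


def run_bootstrap(
    try_bootstrap: Callable[[], bool],
    max_bootstrap_attempts: Optional[int],
) -> bool:
    """Model of the documented retry policy.

    Returns True if bootstrap completed, False if the node carries on without it.
    `try_bootstrap` is a hypothetical callable performing one attempt against
    the trusted peers and returning True on success.
    """
    if max_bootstrap_attempts == 0:
        return False  # 0: skip bootstrap altogether, even with trusted peers
    attempts = 0
    # None (unset): keep retrying until an attempt succeeds
    while max_bootstrap_attempts is None or attempts < max_bootstrap_attempts:
        attempts += 1
        if try_bootstrap():
            return True
    # limit exhausted: keep running unbootstrapped (e.g. as the first node)
    return False
```

Leaving the option unset means the loop only ends on success, which matches the "retry indefinitely" behaviour described above.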

The trusted peers

The trusted peers concept is not fully implemented yet. For now, the key point is that a trusted peer is the first node any node tries to connect to in order to meet new nodes. As far as we know, only one of them is needed; IOHK provides a few others for redundancy.
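Trusted peer entries (like public_address and the quarantine_whitelist entries) are TCP multiaddrs of the form /<protocol>/<host>/tcp/<port>. A small Python helper, hypothetical and not part of jcli, that builds such a string and picks ip4, ip6, dns4 or dns6 as described in the P2P configuration above:

```python
import ipaddress


def tcp_multiaddr(host: str, port: int, prefer_ipv6_dns: bool = False) -> str:
    """Build a TCP multiaddr such as /ip4/127.0.0.1/tcp/3000.

    Literal IP addresses map to /ip4 or /ip6; anything else is treated as a
    DNS name and mapped to /dns4 (or /dns6 when prefer_ipv6_dns is set).
    """
    if not 0 < port < 65536:
        raise ValueError(f"invalid TCP port: {port}")
    try:
        ip = ipaddress.ip_address(host)
        proto = "ip4" if ip.version == 4 else "ip6"
        host = ip.compressed
    except ValueError:
        proto = "dns6" if prefer_ipv6_dns else "dns4"
    return f"/{proto}/{host}/tcp/{port}"
```

For example, tcp_multiaddr("node.example.com", 3000) gives the /dns4/node.example.com/tcp/3000 form shown above.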

Layers

Jörmungandr provides additional layers on top of the poldercast default ones: the preferred list and the bottle in the sea.

Preferred list

This is a special list that allows connecting multiple nodes together without relying on automatic peer discovery. All entries in the preferred list are also whitelisted automatically, so they cannot be quarantined.

configuration

- view_max: the number of entries to show in the view; each round, the layer randomly selects up to view_max entries from the whole preferred_list.peers list. [default: 20]

- peers: the list of peers to keep in the preferred list. [default: EMPTY]

Also, the preferred list will never be quarantined or blacklisted: the node will attempt to connect to (up to view_max of) these nodes every time, even if some are down, unreachable or no longer operated.

COMPATIBILITY NOTE: in the near future, the peer list will be only a list of addresses and the ID part will not be necessary.
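The view_max behaviour (each round, pick up to view_max distinct entries at random from the whole peer list) can be modelled in a few lines. This is a Python sketch of the described behaviour using random.sample, not the poldercast layer itself.

```python
import random
from typing import List, Optional, Sequence


def preferred_view(peers: Sequence[str], view_max: int = 20,
                   rng: Optional[random.Random] = None) -> List[str]:
    """Return up to `view_max` distinct peers chosen uniformly at random."""
    rng = rng or random.Random()
    k = min(view_max, len(peers))  # a short list is returned in full
    return rng.sample(list(peers), k)
```

With fewer entries than view_max, every preferred peer is selected each round, which is why unreachable entries keep being retried.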

Example

p2p:
  layers:
    preferred_list:
      view_max: 20
      peers:
        - address: '/ip4/127.0.0.1/tcp/2029'
          id: 019abc...
        - ...

Setting the public_id

This is needed to advertise your node as a trusted peer.

If not set, the node will generate a random ID, which is fine for a regular user.

You can generate a public id with openssl, for example: openssl rand -hex 24
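If openssl is not at hand, Python's standard secrets module produces an equivalent value: 24 random bytes rendered as 48 hexadecimal characters, the same length as the nodeId shown by jcli rest v0 node stats get. The function name is only illustrative.

```python
import secrets


def new_public_id(num_bytes: int = 24) -> str:
    """Equivalent of `openssl rand -hex 24`: random bytes as lowercase hex."""
    return secrets.token_hex(num_bytes)


if __name__ == "__main__":
    print(new_public_id())
```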

topics_of_interest

This is an optional value to set. The default is:

messages: low

blocks: normal

These values make sense for most users who are not running stake pools or whose nodes are not publicly reachable.

However for a publicly reachable node, the recommended settings would be:

messages: normal

blocks: normal

and for a stake pool:

messages: high

blocks: high

Prometheus

Prerequisites

To use Prometheus you need Jormungandr compiled with the prometheus-metrics feature enabled.

Usage

To enable the Prometheus endpoint, enable it in the configuration file:

prometheus:
  enabled: true

Alternatively, you can use the --prometheus-metrics flag.

When enabled, the Prometheus endpoint is exposed as http(s)://<API_ADDR>:<API_PORT>/prometheus.

jcli

This is the node command line helper. It is mostly meant for developers and stake pool operators. It allows offline operations:

- generating cryptographic materials for the wallets and stake pools;

- creating addresses, transactions and certificates;

- preparing a new blockchain;

and it allows simple interactions with the node:

- querying stats;

- sending transactions and certificates;

- getting raw blocks and UTxOs.

Address

Jormungandr comes with a separate CLI to create and manipulate addresses.

This is useful for creating addresses from their components in the CLI, for debugging addresses and for testing.

Display address info

To display an address and verify it is in a valid format you can utilise:

$ jcli address info ta1svy0mwwm7mdwcuj308aapjw6ra4c3e6cygd0f333nvtjzxg8ahdvxlswdf0

discrimination: testing

public key: ed25519e_pk1pr7mnklkmtk8y5tel0gvnksldwywwkpzrt6vvvvmzus3jpldmtpsx9rnmx

or for example:

$ jcli address \
    info \
    ca1qsy0mwwm7mdwcuj308aapjw6ra4c3e6cygd0f333nvtjzxg8ahdvxz8ah8dldkhvwfghn77se8dp76uguavzyxh5cccek9epryr7mkkr8n7kgx

discrimination: production

public key: ed25519_pk1pr7mnklkmtk8y5tel0gvnksldwywwkpzrt6vvvvmzus3jpldmtpsx9rnmx

group key: ed25519_pk1pr7mnklkmtk8y5tel0gvnksldwywwkpzrt6vvvvmzus3jpldmtpsx9rnmx
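The discrimination and address type can also be read directly from the bech32 string. The Python sketch below assumes the header layout used by Jörmungandr's chain-addr crate, which this document does not state: the first payload byte carries the discrimination in its high bit (set for testing) and the address kind in the remaining bits (3 single, 4 group, 5 account, 6 multisig). The example addresses on this page are consistent with that layout, but treat it as an assumption. The sketch also skips bech32 checksum verification, so jcli address info remains the real validity check.

```python
# Bech32 alphabet (BIP-173): a character's index is its 5-bit value.
CHARSET = "qpzry9x8gf2tvdw0s3jn54khce6mua7l"

# ASSUMPTION: kind codes as defined by chain-addr, not by this document.
KINDS = {3: "single", 4: "group", 5: "account", 6: "multisig"}


def address_header(address: str) -> tuple:
    """Return (prefix, discrimination, kind) for a bech32 address.

    The checksum (last 6 characters) is NOT verified here.
    """
    address = address.lower()
    sep = address.rfind("1")  # the human-readable prefix ends at the last '1'
    if sep < 1 or len(address) - sep - 1 < 8:
        raise ValueError("not a bech32 string")
    prefix, data = address[:sep], address[sep + 1 : -6]
    # The first byte spans the first two 5-bit groups (5 bits + 3 bits).
    hi, lo = CHARSET.index(data[0]), CHARSET.index(data[1])
    first_byte = (hi << 3) | (lo >> 2)
    discrimination = "testing" if first_byte & 0x80 else "production"
    kind = KINDS.get(first_byte & 0x7F, "unknown")
    return prefix, discrimination, kind
```

Because the prefix is split off at the last 1, custom prefixes such as address_ (see the prefix example below) are handled the same way.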

Creating an address

Each of the following commands creates addresses for production and testing chains. For chains where the discrimination is testing, you need to use the --testing flag.

There are 3 types of addresses:

- Single address : A simple spending key. This doesn’t have any stake in the system

- Grouped address : A spending key attached to an account key. The stake is automatically

- Account address : An account key. The account is its own stake

Address for UTxO

You can create a single address (non-staked) using the spending public key for this address utilising the following command:

$ jcli address \
    single ed25519e_pk1jnlhwdgzv3c9frknyv7twsv82su26qm30yfpdmvkzyjsdgw80mfqduaean

ca1qw207ae4qfj8q4yw6v3ned6psa2r3tgrw9u3y9hdjcgj2p4pcaldyukyka8

To add the staking information and make a group address, simply add the account public key as a second parameter of the command:

$ jcli address \
    single \
    ed25519_pk1fxvudq6j7mfxvgk986t5f3f258sdtw89v4n3kr0fm6mpe4apxl4q0vhp3k \
    ed25519_pk1as03wxmy2426ceh8nurplvjmauwpwlcz7ycwj7xtl9gmx9u5gkqscc5ylx

ca1q3yen35r2tmdye3zc5lfw3x992s7p4dcu4jkwxcda80tv8xh5ym74mqlzudkg42443nw08cxr7e9hmcuzals9ufsa9uvh723kvteg3vpvrcxcq

Address for Account

To create an account address you need the account public key and run:

$ jcli address \
    account ed25519_pk1c4yq3hflulynn8fef0hdq92579n3c49qxljasrl9dnuvcksk84gs9sqvc2

ca1qhz5szxa8lnujwva8997a5q42nckw8z55qm7tkq0u4k03nz6zc74ze780qe

Changing the address prefix

You can change the address prefix, allowing you to provide more enriched data to the user. However, this prefix is not forwarded to the node; it is only for UI/UX.

$ jcli address \
    account \
    --prefix=address_ \
    ed25519_pk1yx6q8rsndawfx8hjzwntfs2h2c37v5g6edv67hmcxvrmxfjdz9wqeejchg

address_1q5smgquwzdh4eyc77gf6ddxp2atz8ej3rt94nt6l0qes0vexf5g4cw68kdx

Certificate

Tooling for offline transaction creation

Building stake pool registration certificate

Builds a stake pool registration certificate.

jcli certificate new stake-pool-registration \
    --vrf-key <vrf-public-key> \
    --kes-key <kes-public-key> \
    --start-validity <seconds-since-start> \
    --management-threshold <THRESHOLD> \
    --owner <owner-public-key> \
    [--operator <operator-public-key>] \
    [<output-file>]

Where:

- --operator <operator-public-key> - optional, public key of the operator(s) of the pool.

- output-file - optional, write the output to the given file or print it to the standard output if not defined.

Retiring a stake pool

It is possible to retire a stake pool from the blockchain. By doing so the stake delegated to the stake pool will become dangling and will need to be re-delegated.

Remember, though, that the action won’t be applied until the following epoch: the certificate takes a whole epoch before being applied, which should leave stakers time to redistribute their stake to other pools before their stake becomes dangling.

It might be valuable for a stake pool operator to keep the stake pool running until the stake pool retirement certificate is fully applied in order to not miss any potential rewards.

example:

jcli certificate new stake-pool-retirement \
    --pool-id <STAKE_POOL_ID> \
    --retirement-time <seconds-since-start> \
    [<output-file>]

where:

- output-file - optional, write the output to the given file or print it to the standard output if not defined.

- --retirement-time - the number of seconds since the start at which the stake pool retires; 0 means as soon as possible.

- --pool-id - hex-encoded stake pool ID. It can be retrieved using the jcli certificate get-stake-pool-id command. See here for more details.

Building stake pool delegation certificate

Builds a stake pool delegation certificate.

jcli certificate new stake-delegation <STAKE_KEY> <STAKE_POOL_IDS> [--output <output-file>]

Where:

- -o, --output <output-file> - optional, write the output to the given file or print it to the standard output if not defined.

- <STAKE_KEY> - the public key used in the stake key registration.

- <STAKE_POOL_IDS>... - hex-encoded stake pool IDs and their numeric weights in the format “pool_id:weight”. If the weight is not provided, it defaults to 1.
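Scripts that assemble delegation arguments can validate them first. This Python sketch parses the pool_id:weight format described above (a missing weight defaults to 1). Two checks are assumptions, not documented rules: pool IDs are 64 hex characters (32 bytes, like the IDs shown elsewhere on this page), and weights are positive integers.

```python
import re
from typing import List, Tuple

# ASSUMPTION: stake pool IDs are 32-byte values, i.e. 64 hex characters.
POOL_ID_RE = re.compile(r"^[0-9a-fA-F]{64}$")


def parse_delegation_targets(args: List[str]) -> List[Tuple[str, int]]:
    """Parse "pool_id[:weight]" arguments; a missing weight defaults to 1."""
    targets = []
    for arg in args:
        pool_id, sep, weight_str = arg.partition(":")
        if not POOL_ID_RE.match(pool_id):
            raise ValueError(f"not a hex-encoded pool ID: {pool_id!r}")
        weight = int(weight_str) if sep else 1
        # ASSUMPTION: weights must be positive integers.
        if weight < 1:
            raise ValueError(f"weight must be positive: {arg!r}")
        targets.append((pool_id.lower(), weight))
    return targets
```

Each parsed pair maps back to one <STAKE_POOL_IDS> argument of jcli certificate new stake-delegation.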

Building update proposal certificate

Builds an update proposal certificate.

jcli certificate new update-proposal \
    <PROPOSER_ID> \
    <CONFIG_FILE> \
    [<output-file>]

Where:

- <PROPOSER_ID> - the proposer ID, public key of the one who will sign this certificate

- <CONFIG_FILE> - optional, the file path to the config file defining the config param changes. If omitted it will be read from the standard input.

- <output-file> - optional, write the output to the given file or print it to the standard output if not defined

For example your config file may look like:

# The block0-date defines the date the blockchain starts

# expected value in seconds since UNIX_EPOCH

#

# By default the value will be the current date and time. Or you can

# add a specific time by entering the number of seconds since UNIX

# Epoch

- Block0Date: 17

# This is the type of discrimination of the blockchain

# if this blockchain is meant for production then

# use 'production' otherwise use 'test'.

- Discrimination: test

# The initial consensus version:

#

# * BFT consensus: bft

# * Genesis Praos consensus: genesis

- ConsensusVersion: bft

# Number of slots in each epoch.

- SlotsPerEpoch: 42

# The slot duration, in seconds, is the time between the creation

# of 2 blocks

- SlotDuration: 79

# Epoch stability depth

- EpochStabilityDepth: 12

# Genesis praos active slot coefficient

# Determines minimum stake required to try becoming slot leader, must be in range (0,1]

- ConsensusGenesisPraosActiveSlotsCoeff: "0.004"

# This is the size, in bytes, of all the contents of the block (excluding the

# block header).

- BlockContentMaxSize: 96

# Add a new bft leader

- AddBftLeader: ed25519_pk1g53asm6l4gcwk2pm5ylr092umaur5yes47rqv7ng5yl525x8g8mq5nk7x7

# Remove a bft leader

- RemoveBftLeader: ed25519_pk1a3sjcg6gt4d05k5u6uqyzmsap8cjw37ul9cgztz8m697lvkz26uqg49nm3

# The fee calculations settings

#

# total fees: constant + (num_inputs + num_outputs) * coefficient [+ certificate]

- LinearFee:
    # this is the minimum value to pay for every transaction
    constant: 57
    # the additional fee to pay for every input and output
    coefficient: 14
    # the additional fee to pay if the transaction embeds a certificate
    certificate: 95
    # (optional) fees for different types of certificates, to override the one
    # given in `certificate` just above
    #
    # here: all certificate fees are set to `95` except for pool registration,
    # stake delegation and owner stake delegation, which are respectively
    # `5`, `2` and `1`.
    per_certificate_fees:
      # (optional) if not specified, the pool registration certificate fee will be
      # the one set by linear_fees.certificate
      certificate_pool_registration: 5
      # (optional) if not specified, the delegation certificate fee will be
      # the one set by linear_fees.certificate
      certificate_stake_delegation: 2
      # (optional) if not specified, the owner delegation certificate fee will be
      # the one set by linear_fees.certificate. Here the owner stake delegation
      # fee is set to `1` instead of the default `95`:
      certificate_owner_stake_delegation: 1

# Proposal expiration in epochs

- ProposalExpiration: 68

# The speed to update the KES Key in seconds

- KesUpdateSpeed: 120

# Increase the treasury amount

- TreasuryAdd: 10000

# Set the total reward supply available for monetary creation

- RewardPot: 100000000000000

# Set the treasury parameters; this is the tax type, just as in the stake pool
# registration certificate parameters.
#
# When distributing the rewards, the treasury will be served first as per
# the incentive specification document
- TreasuryParams:
    # the fixed value the treasury will take from the total reward pot of the epoch
    fixed: 1000
    # the extra percentage the treasury will take from the reward pot of the epoch
    ratio: "1/10"
    # it is possible to add a max bound to the total value the treasury takes
    # at each reward distribution. For example, one could cap the treasury tax
    # to 10000, as the following line does:
    max_limit: 10000

# set the reward supply consumption. These parameters will define how the
# total_reward_supply is consumed for the stake pool reward
#
# There are fundamentally many potential choices for how rewards are contributed back; here are two valid examples:
#
# Linear formula: constant - ratio * (#epoch after epoch_start / epoch_rate)
# Halving formula: constant * ratio ^ (#epoch after epoch_start / epoch_rate)
- RewardParams:
    halving: # or use "linear" for the linear formula
      # In the linear formula, it represents the starting point of the contribution
      # at #epoch=0, whereas in the halving formula it is used as the starting
      # constant for the calculation.
      constant: 2
      # In the halving formula, an effective value between 0.0 and 1.0 indicates a
      # reducing contribution, whereas above 1.0 it indicates an acceleration of contribution.
      #
      # However, in the linear formula the meaning is just a scaling factor for the epoch zone
      # ((current_epoch - start_epoch) / epoch_rate). A further requirement is that this ratio
      # is expressed in fractional form (e.g. 1/2), which allows calculation in integer form.
      ratio: 3/68
      # indicates when this contribution starts. Note that if the epoch is before
      # epoch_start, the overall contribution is zero.
      epoch_start: 89
      # the rate at which the contribution is tweaked relative to the epoch.
      epoch_rate: 20

# Fees for different types of certificates, to override the one
# given in the LinearFee `certificate` field.
- PerCertificateFees:
    # (optional) if not specified, the pool registration certificate fee will be
    # the one set by linear_fees.certificate
    certificate_pool_registration: 5
    # (optional) if not specified, the delegation certificate fee will be
    # the one set by linear_fees.certificate
    certificate_stake_delegation: 2
    # (optional) if not specified, the owner delegation certificate fee will be
    # the one set by linear_fees.certificate. Here the owner stake delegation
    # fee is set to `1` instead:
    certificate_owner_stake_delegation: 1

# Set where to send the fees generated by transactions activity.

#

# It is possible to send all the generated fees to the "treasury"

- FeesInTreasury: rewards

- RewardLimitNone

# Limit the epoch total reward drawing limit to a portion of the total

# active stake of the system.

#

# for example, if set to 10%, the reward drawn will be bounded by
# 10% of the total active stake.

- RewardLimitByAbsoluteStake: 22/72

# Settings to incentivize the number of stake pools to be registered
# on the blockchain.
#
# These settings do not prevent more stake pools from being added. For example,
# if there are already 1000 stake pools, someone can still register a new
# stake pool and affect the rewards of everyone else too.
#
# If the threshold is reached, a pool doesn't really have an incentive to
# create more blocks than 1 / set-value-of-pools % of stake.
- PoolRewardParticipationCapping:
    min: 8
    max: 52

# Add a new committee id

- AddCommitteeId: 8103973beaa56f4e9440004ee8e8f8359ea18499d4199c1b018c072e7f503ea0

# Remove a committee id

- RemoveCommitteeId: 6375dcdd714e69c197e99c32486ec28f166a50da7a1e3694807cd8a76f1c8175

- PerVoteCertificateFees:
    certificate_vote_plan: 52
    certificate_vote_cast: 57

# The transaction max expiry epochs

- TransactionMaxExpiryEpochs: 91
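The fee formula in the comments above, total = constant + (num_inputs + num_outputs) * coefficient [+ certificate], together with the per_certificate_fees overrides, can be checked with a short Python sketch. The override keys reuse the config field names; any other certificate label (such as some_other_certificate in the checks) is hypothetical and falls back to certificate. Vote plan and vote cast fees, configured separately through PerVoteCertificateFees, are not modelled.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class LinearFee:
    constant: int
    coefficient: int
    certificate: int
    # Overrides keyed by certificate kind; unlisted kinds fall back to `certificate`.
    per_certificate_fees: Dict[str, int] = field(default_factory=dict)

    def total(self, num_inputs: int, num_outputs: int,
              certificate_kind: Optional[str] = None) -> int:
        fee = self.constant + (num_inputs + num_outputs) * self.coefficient
        if certificate_kind is not None:
            fee += self.per_certificate_fees.get(certificate_kind, self.certificate)
        return fee


# Values from the update proposal example above.
example = LinearFee(
    constant=57,
    coefficient=14,
    certificate=95,
    per_certificate_fees={
        "certificate_pool_registration": 5,
        "certificate_stake_delegation": 2,
        "certificate_owner_stake_delegation": 1,
    },
)
```

With these values a transaction with 1 input and 2 outputs pays 57 + 3 * 14 = 99, or 101 if it embeds a stake delegation certificate. With the node settings shown later under jcli rest v0 settings get (constant 2, coefficient 1), the same transaction without a certificate pays 5.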

Building vote cast certificate

Builds a vote cast certificate.

Public vote cast

jcli certificate new vote-cast public \
    --choice <choice> \
    --proposal-index <proposal-index> \
    --vote-plan-id <vote-plan-id> \
    --output <output-file>

Where:

- <choice> - the number of the choice within the proposal you vote for

- <proposal-index> - the number of the proposal in the vote plan you vote for

- <vote-plan-id> - the vote plan identifier on the blockchain

- <output-file> - optional, write the output to the given file or print it to the standard output if not defined

Private vote cast

jcli certificate new vote-cast private \
    --choice <choice> \
    --options-size <options> \
    --proposal-index <proposal-index> \
    --vote-plan-id <vote-plan-id> \
    --key-path <secret-key> \
    --output <output-file>

Where:

- <choice> - the number of the choice within the proposal you vote for

- <options> - size of voting options

- <proposal-index> - the number of the proposal in the vote plan you vote for

- <vote-plan-id> - the vote plan identifier on the blockchain

- <secret-key> - optional, key to encrypt the vote with; if not provided, the secret key is read from stdin

- <output-file> - optional, write the output to the given file or print it to the standard output if not defined

Genesis

Tooling for working with a genesis file

Usage

jcli genesis [subcommand]

Subcommands

- decode: Print the YAML file corresponding to an encoded genesis block.

- encode: Create the genesis block of the blockchain from a given yaml file.

- hash: Print the block hash of the genesis

- init: Create a default Genesis file with appropriate documentation to help creating the YAML file

- help

Examples

Encode a genesis file

jcli genesis encode --input genesis.yaml --output block-0.bin

or, equivalently:

cat genesis.yaml | jcli genesis encode > block-0.bin

Get the hash of an encoded genesis file

jcli genesis hash --input block-0.bin

Cryptographic keys

There are multiple types of keys for different use cases.

| type | usage |
|---|---|
| ed25519 | Key for the Ed25519 signing algorithm |
| ed25519-bip32 | Related to the HDWallet, Ed25519 Extended with chain code for derivation |
| ed25519-extended | Related to Ed25519Bip32 without the chain code |
| sum-ed25519-12 | For stake pool, necessary for the KES |
| ristretto-group2-hash-dh | For stake pool, necessary for the VRF |

To generate these keys, use:

$ jcli key generate --type=Ed25519

ed25519_sk1cvac48ddf2rpk9na94nv2zqhj74j0j8a99q33gsqdvalkrz6ar9srnhvmt

and to extract the associated public key:

$ echo ed25519_sk1cvac48ddf2rpk9na94nv2zqhj74j0j8a99q33gsqdvalkrz6ar9srnhvmt | jcli key to-public

ed25519_pk1z2ffur59cq7t806nc9y2g64wa60pg5m6e9cmrhxz9phppaxk5d4sn8nsqg

Signing data

Sign data with private key. Supported key formats are: ed25519, ed25519-bip32, ed25519-extended and sumed25519_12.

jcli key sign <options> <data>

The options are

- --secret-key <secret_key> - path to the file with the bech32-encoded secret key

- -o, --output <output> - path to the file to write the signature into; if no value is passed, standard output will be used

<data> - path to the file with the data to sign; if no value is passed, standard input will be used

Verifying signed data

Verify signed data with a public key. Supported key formats are: ed25519, ed25519-bip32 and sumed25519_12.

jcli key verify <options> <data>

The options are

- --public-key <public_key> - path to the file with the bech32-encoded public key

- --signature <signature> - path to the file with the signature

<data> - path to the file with the data to verify; if no value is passed, standard input will be used

REST

Jormungandr comes with a CLI client for manual communication with nodes over HTTP.

Conventions

Many CLI commands have common arguments:

- -h <addr> or --host <addr> - Node API address. It must always have the http:// or https:// prefix and always end with /api. E.g. -h http://127.0.0.1:8081/api, --host https://node.com:8443/cardano/api.

- --debug - Print additional debug information to stderr. The output format is intentionally undocumented and unstable.

- --output-format <format> - Format of output data. Possible values: json, yaml; default: yaml. Any other value is treated as a custom format using values from the output data structure. The syntax is Go text template: https://golang.org/pkg/text/template/.
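Scripts that wrap jcli can check a --host value against the rule above before contacting the node. A minimal Python sketch using urllib.parse; the function name is hypothetical and the check covers only the documented scheme and /api suffix requirements.

```python
from urllib.parse import urlparse


def check_node_api_address(addr: str) -> str:
    """Validate a jcli --host value: http(s) scheme and a path ending in /api."""
    parsed = urlparse(addr)
    if parsed.scheme not in ("http", "https"):
        raise ValueError(f"{addr!r}: must start with http:// or https://")
    if not parsed.netloc:
        raise ValueError(f"{addr!r}: missing host")
    if not parsed.path.endswith("/api"):
        raise ValueError(f"{addr!r}: path must end with /api")
    return addr
```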

Node stats

Fetches node stats

jcli rest v0 node stats get <options>

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

- --output-format <format> - see conventions

YAML printed on success

---

# Number of blocks received by node

blockRecvCnt: 1102

# Size in bytes of all transactions in last block

lastBlockContentSize: 484

# The Epoch and slot Number of the block (optional)

lastBlockDate: "20.29"

# Sum of all fee values in all transactions in last block

lastBlockFees: 534

# The block hash, its unique identifier in the blockchain (optional)

lastBlockHash: b9597b45a402451540e6aabb58f2ee4d65c67953b338e04c52c00aa0886bd1f0

# The block number, in order, since the block0 (optional)

lastBlockHeight: 202901

# Sum of all input values in all transactions in last block

lastBlockSum: 51604

# The time slot of the tip block

lastBlockTime: "2020-01-30T22:37:46+00:00"

# Number of transactions in last block

lastBlockTx: 2

# The time at which we received the last block, not necessarily the current tip block (optional)

lastReceivedBlockTime: "2020-01-30T22:37:59+00:00"

# 24 bytes encoded in hexadecimal Node ID

nodeId: "ad24537cb009bedaebae3d247fecee9e14c57fe942e9bb0d"

# Number of nodes that are available for p2p discovery and events propagation

peerAvailableCnt: 321

# Number of nodes that have been quarantined by our node

peerQuarantinedCnt: 123

# Total number of nodes

peerTotalCnt: 444

# Number of nodes that are connected to ours but that are not publicly reachable

peerUnreachableCnt: 0

# State of the node

state: Running

# Number of transactions received by node

txRecvCnt: 5440

# Node uptime in seconds

uptime: 20032

# Node app version

version: jormungandr 0.8.9-30d20d2e
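Block dates such as lastBlockDate: "20.29" use the <epoch>.<slot_id> form. Given block0Time, slotDuration and slotsPerEpoch from jcli rest v0 settings get, a date can be turned into an absolute slot number and an estimated slot start time. This Python sketch assumes the slot settings have not changed since block 0 (an update proposal can change them), so treat the result as an estimate.

```python
from datetime import datetime, timedelta


def parse_block_date(date: str) -> tuple:
    """Split "<epoch>.<slot_id>" into (epoch, slot) integers."""
    epoch, slot = date.split(".")
    return int(epoch), int(slot)


def slot_start_time(date: str, block0_time: str, slot_duration: int,
                    slots_per_epoch: int) -> datetime:
    """Estimate when the slot identified by `date` started.

    ASSUMPTION: slot_duration (seconds) and slots_per_epoch are constant since block 0.
    """
    epoch, slot = parse_block_date(date)
    if not 0 <= slot < slots_per_epoch:
        raise ValueError(f"slot {slot} out of range for {slots_per_epoch} slots/epoch")
    absolute_slot = epoch * slots_per_epoch + slot
    start = datetime.fromisoformat(block0_time)
    return start + timedelta(seconds=absolute_slot * slot_duration)
```

With the sample node settings (block0Time "2019-07-09T12:32:51+00:00", 5-second slots, 720 slots per epoch), date "0.268" maps to 12:55:11, which matches the currSlotStartTime in that sample.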

Get UTxO

Fetches UTxO details

jcli rest v0 utxo <fragment-id> <output-index> get <options>

- <fragment-id> - hex-encoded ID of the transaction fragment

- <output-index> - index of the transaction output

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

- --output-format <format> - see conventions

YAML printed on success

---

# UTxO owner address

address: ca1svs0mwkfky9htpam576mc93mee5709khre8dgnqslj6y3p5f77s5gpgv02w

# UTxO value

value: 10000

Post transaction

Posts a signed, hex-encoded transaction

jcli rest v0 message post <options>

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

- -f, --file <file_path> - File containing the hex-encoded transaction. If not provided, the transaction will be read from stdin.

The fragment ID is printed on success; it can help you find the transaction status using the get message log command:

50f21ac6bd3f57f231c4bf9c5fff7c45e2529c4dffed68f92410dbf7647541f1

Get message log

Get the node’s logs on the message pool. This provides information on pending transactions, rejected transactions, and transactions that have been added to a block.

jcli rest v0 message logs <options>

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

- --output-format <format> - see conventions

YAML printed on success

---

- fragment_id: 7db6f91f3c92c0aef7b3dd497e9ea275229d2ab4dba6a1b30ce6b32db9c9c3b2 # hex-encoded fragment ID
  last_updated_at: 2019-06-02T16:20:26.201000000Z # RFC3339 timestamp of last fragment status change
  received_at: 2019-06-02T16:20:26.201000000Z # RFC3339 timestamp of fragment reception
  received_from: Network # how fragment was received
  status: Pending # fragment status

received_from can be one of:

received_from: Rest # fragment was received from node's REST API

received_from: Network # fragment was received from the network

status can be one of:

status: Pending # fragment is pending

status:
  Rejected: # fragment was rejected
    reason: reason of rejection # cause

status: # fragment was included in a block
  InABlock:
    date: "6637.3" # block epoch and slot ID formed as <epoch>.<slot_id>
    block: "d9040ca57e513a36ecd3bb54207dfcd10682200929cad6ada46b521417964174"
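For scripting, --output-format json is easier to consume than YAML. The Python sketch below groups fragment IDs by status; it assumes the JSON output mirrors the YAML shape above (status is either the string "Pending" or a one-key object such as {"Rejected": {...}} or {"InABlock": {...}}), which you should check against your node's actual output.

```python
import json
from typing import Dict, List


def summarize_fragment_logs(raw_json: str) -> Dict[str, List[str]]:
    """Group fragment IDs by status: pending, rejected or in_block."""
    summary = {"pending": [], "rejected": [], "in_block": []}
    for entry in json.loads(raw_json):
        status = entry["status"]
        fid = entry["fragment_id"]
        if status == "Pending":
            summary["pending"].append(fid)
        elif isinstance(status, dict) and "Rejected" in status:
            summary["rejected"].append(fid)
        elif isinstance(status, dict) and "InABlock" in status:
            summary["in_block"].append(fid)
        else:
            raise ValueError(f"unexpected status for {fid}: {status!r}")
    return summary
```

The input would come from jcli rest v0 message logs -h <node_addr> --output-format json.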

Blockchain tip

Retrieves a hex-encoded ID of the blockchain tip

jcli rest v0 tip get <options>

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

Get block

Retrieves a hex-encoded block with given ID

jcli rest v0 block <block_id> get <options>

- <block_id> - hex-encoded block ID

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

Get next block ID

Retrieves a list of hex-encoded IDs of descendants of the block with the given ID. Each list element is on a separate line. The IDs are sorted from closest to farthest.

jcli rest v0 block <block_id> next-id get <options>

- <block_id> - hex-encoded block ID

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

- -c, --count <count> - Maximum number of IDs; must be between 1 and 100, default 1

Get account state

Get account state

jcli rest v0 account get <account-id> <options>

- <account-id> - ID of an account, bech32-encoded

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

- --output-format <format> - see conventions

YAML printed on success

---

counter: 1

delegation: c780f14f9782770014d8bcd514b1bc664653d15f73a7158254730c6e1aa9f356

value: 990

- value is the current balance of the account;

- counter is the number of transactions performed using this account; this is useful to know when signing new transactions;

- delegation is the stake pool identifier the account is delegating to. This value may be unset if no delegation certificate associated with this account has been sent.

Node settings

Fetches node settings

jcli rest v0 settings get <options>

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

- --output-format <format> - see conventions

YAML printed on success

---
block0Hash: 8d94ecfcc9a566f492e6335858db645691f628b012bed4ac2b1338b5690355a7 # hash of block 0
block0Time: "2019-07-09T12:32:51+00:00" # creation time of block 0
blockContentMaxSize: 102400 # the block content's max size in bytes
consensusVersion: bft # currently used consensus
currSlotStartTime: "2019-07-09T12:55:11+00:00" # current slot start time
epochStabilityDepth: 102400 # the depth, in number of blocks, beyond which the blockchain is
                            # considered stable and rollbacks are prevented
fees: # transaction fee configuration
  certificate: 4 # fee per certificate
  coefficient: 1 # fee per input and per output
  constant: 2 # fee per transaction
  per_certificate_fees: # fees for specific certificate types, all zero if this object is absent (optional)
    certificate_pool_registration: 5 # fee per pool registration, zero if absent (optional)
    certificate_stake_delegation: 15 # fee per stake delegation, zero if absent (optional)
    certificate_owner_stake_delegation: 2 # fee per pool owner stake delegation, zero if absent (optional)
rewardParams: # parameters for rewards calculation
  compoundingRatio: # speed at which the reward is reduced, expressed as numerator/denominator
    denominator: 1024
    numerator: 1
  compoundingType: Linear # reward reduction algorithm, either "Linear" or "Halvening"
  epochRate: 100 # number of epochs between reward reductions
  epochStart: 0 # epoch when rewarding starts
  initialValue: 10000 # initial reward
slotDuration: 5 # slot duration in seconds
slotsPerEpoch: 720 # number of slots per epoch
treasuryTax: # tax on rewards that goes to the treasury
  fixed: 5 # amount subtracted first, as a fixed value
  ratio: # ratio taxed after the fixed amount is subtracted, expressed as numerator/denominator
    numerator: 1
    denominator: 10000
  max: 100 # limit of the tax (optional)
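A minimal Python sketch of two calculations these settings feed into, for illustration only. The fee follows the field comments: `constant` once per transaction, `coefficient` per input and per output, plus one certificate fee. Treating an absent or zero per-type entry as a fallback to the generic `certificate` fee is an assumption (the comments above only say such entries read as zero when absent). The slot start time assumes `slotDuration` and `slotsPerEpoch` have not changed since block 0, even though both are updatable. `LinearFee`, the certificate-kind strings, and `slot_start_time` are hypothetical names.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Dict, Optional


@dataclass
class LinearFee:
    constant: int     # fee per transaction
    coefficient: int  # fee per input and per output
    certificate: int  # generic fee per certificate
    per_certificate_fees: Dict[str, int] = field(default_factory=dict)

    def fee(self, inputs: int, outputs: int, cert_kind: Optional[str] = None) -> int:
        total = self.constant + self.coefficient * (inputs + outputs)
        if cert_kind is not None:
            # Assumption: a non-zero per-type fee replaces the generic certificate fee.
            specific = self.per_certificate_fees.get(cert_kind, 0)
            total += specific if specific else self.certificate
        return total


def slot_start_time(block0_time: datetime, slot_duration: int,
                    slots_per_epoch: int, epoch: int, slot: int) -> datetime:
    """Start of (epoch, slot), assuming slot settings unchanged since block 0."""
    absolute_slot = epoch * slots_per_epoch + slot
    return block0_time + timedelta(seconds=absolute_slot * slot_duration)
```

With the example values, a transaction with one input and two outputs costs 2 + 1 × 3 = 5. The gap between `block0Time` and `currSlotStartTime` is 1340 seconds, that is 268 slots of 5 seconds, so `slot_start_time(block0, 5, 720, 0, 268)` gives 12:55:11, matching the example.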

Node shutdown

Shuts down the node

jcli rest v0 shutdown get <options>

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

Get leaders

Fetches the list of leader IDs

jcli rest v0 leaders get <options>

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

- --output-format <format> - see conventions

YAML printed on success

---

- 1 # list of leader IDs

- 2

Register leader

Registers a new leader and prints its ID

jcli rest v0 leaders post <options>

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

- --output-format <format> - see conventions

- -f, --file <file> - File containing YAML with the leader secret. It must have the same format as the secret YAML passed to Jormungandr with --secret. If not provided, the YAML is read from stdin.

On success, the ID of the created leader is printed

3

Delete leader

Deletes the leader with the given ID

jcli rest v0 leaders delete <id> <options>

- <id> - ID of the leader to delete

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

Get leadership logs

Fetches leadership logs

jcli rest v0 leaders logs get <options>

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

- --output-format <format> - see conventions

YAML printed on success

---
- created_at_time: "2019-08-19T12:25:00.417263555+00:00"
  enclave_leader_id: 1
  finished_at_time: "2019-08-19T23:19:05.010113333+00:00"
  scheduled_at_date: "0.3923"
  scheduled_at_time: "2019-08-19T23:18:35+00:00"
  wake_at_time: "2019-08-19T23:18:35.001254555+00:00"
  status:
    Block:
      chain_length: 201018
      block: d9040ca57e513a36ecd3bb54207dfcd10682200929cad6ada46b521417964174
      parent: cc72d4ca957b03d7c795596b7fd7b1ff09c649c3e2877c508c0466abc8604832

Possible values for the status:

# the action is still pending
status: Pending

# the action successfully created the block with the given hash and parent
status:
  Block:
    chain_length: 201018
    block: d9040ca57e513a36ecd3bb54207dfcd10682200929cad6ada46b521417964174
    parent: cc72d4ca957b03d7c795596b7fd7b1ff09c649c3e2877c508c0466abc8604832

# the action failed; the reason is given
status:
  Rejected:
    reason: "Missed the deadline to compute the schedule"
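A minimal Python sketch of handling the three status shapes once a log entry has been loaded into Python values (for example with a YAML library, which is not part of the standard library). It also splits `scheduled_at_date`, assuming the `"0.3923"` form means `epoch.slot`. `describe_status` and `parse_scheduled_date` are hypothetical helpers.

```python
from typing import Any, Dict, Tuple, Union


def describe_status(status: Union[str, Dict[str, Any]]) -> str:
    """Summarise one `status` value: Pending, Block or Rejected."""
    if status == "Pending":
        return "pending"
    if isinstance(status, dict) and "Block" in status:
        block = status["Block"]
        return f"created block {block['block']} at chain length {block['chain_length']}"
    if isinstance(status, dict) and "Rejected" in status:
        return f"rejected: {status['Rejected']['reason']}"
    raise ValueError(f"unknown status: {status!r}")


def parse_scheduled_date(date: str) -> Tuple[int, int]:
    """Split a scheduled_at_date such as "0.3923" into (epoch, slot); format assumed."""
    epoch, slot = date.split(".")
    return int(epoch), int(slot)
```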

Get stake pools

Fetches the list of stake pool IDs

jcli rest v0 stake-pools get <options>

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

- --output-format <format> - see conventions

YAML printed on success

---

- 5cf03f333f37eb7b987dbc9017b8a928287a3d77d086cd93cd9ad05bcba7e60f # list of stake pool IDs

- 3815602c096fcbb91072f419c296c3dfe1f730e0f446a9bd2553145688e75615

Get stake distribution

Fetches stake information

jcli rest v0 stake get <options> [<epoch>]

- <epoch> - Epoch to get the stake distribution from. (optional)

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

- --output-format <format> - see conventions

YAML printed on success

jcli rest v0 stake get <options> - stake distribution from the current epoch

---
epoch: 228 # Epoch of last block
stake:
  dangling: 0 # Total value stored in accounts, but assigned to nonexistent pools
  pools:
    - - 5cf03f333f37eb7b987dbc9017b8a928287a3d77d086cd93cd9ad05bcba7e60f # stake pool ID
      - 1000000000000 # staked value
    - - 3815602c096fcbb91072f419c296c3dfe1f730e0f446a9bd2553145688e75615 # stake pool ID
      - 1000000000000 # staked value
  unassigned: 0 # Total value stored in accounts, but not assigned to any pool

jcli rest v0 stake get <options> 10 - stake distribution from a specific epoch (epoch 10 in this example)

---
epoch: 10 # Epoch specified in the request
stake:
  dangling: 0 # Total value stored in accounts, but assigned to nonexistent pools
  pools:
    - - 5cf03f333f37eb7b987dbc9017b8a928287a3d77d086cd93cd9ad05bcba7e60f # stake pool ID
      - 1000000000000 # staked value
    - - 3815602c096fcbb91072f419c296c3dfe1f730e0f446a9bd2553145688e75615 # stake pool ID
      - 1000000000000 # staked value
  unassigned: 0 # Total value stored in accounts, but not assigned to any pool
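A minimal Python sketch of turning the `pools` list of `[pool ID, staked value]` pairs into each pool's share of the stake. As a design choice of this sketch, `dangling` and `unassigned` are left out of the total because neither is assigned to an existing pool; `pool_shares` is a hypothetical helper.

```python
from typing import Dict, List, Sequence


def pool_shares(pools: List[Sequence]) -> Dict[str, float]:
    """Map each pool ID to its fraction of the total stake held by listed pools."""
    total = sum(value for _, value in pools)
    if total == 0:
        return {pool_id: 0.0 for pool_id, _ in pools}
    return {pool_id: value / total for pool_id, value in pools}
```

For either example above, both pools hold 1000000000000, so each gets a share of 0.5.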

Network stats

Fetches network stats

jcli rest v0 network stats get <options>

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

- --output-format <format> - see conventions

YAML printed on success

---
- # node address (optional)
  addr: "3.124.55.91:3000"
  # hex-encoded node ID
  nodeId: 0102030405060708090a0b0c0d0e0f101112131415161718191a1b1c1d1e1f20
  # timestamp of when the connection was established
  establishedAt: "2019-10-14T06:24:12.010231281+00:00"
  # timestamp of the last block received from the node, if any (optional)
  lastBlockReceived: "2019-10-14T00:45:57.419496113+00:00"
  # timestamp of the last fragment received from the node, if any (optional)
  lastFragmentReceived: "2019-10-14T00:45:58.419496150+00:00"
  # timestamp of the last gossip received from the node, if any (optional)
  lastGossipReceived: "2019-10-14T00:45:59.419496188+00:00"
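A minimal Python sketch of using these stats to find peers that have not sent a block recently, treating a missing `lastBlockReceived` as "never". The timestamps carry nanoseconds, which Python's `datetime` cannot hold, so the sketch truncates the fraction to microseconds before parsing; it assumes timestamps shaped like the example above. `parse_timestamp` and `stale_peers` are hypothetical helpers, and `max_age_s` is an arbitrary threshold chosen by the caller.

```python
import re
from datetime import datetime
from typing import Any, Dict, List, Optional

# Keep at most 6 fractional digits (microseconds); drop the rest.
_FRACTION = re.compile(r"(\.\d{6})\d+")


def parse_timestamp(value: str) -> datetime:
    """Parse timestamps like "2019-10-14T06:24:12.010231281+00:00"."""
    return datetime.fromisoformat(_FRACTION.sub(r"\1", value))


def stale_peers(peers: List[Dict[str, Any]], now: datetime, max_age_s: float) -> List[str]:
    """Node IDs of peers that never sent a block, or not within max_age_s seconds."""
    stale = []
    for peer in peers:
        last: Optional[str] = peer.get("lastBlockReceived")  # optional field
        if last is None or (now - parse_timestamp(last)).total_seconds() > max_age_s:
            stale.append(peer["nodeId"])
    return stale
```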

Get stake pool details

Fetches stake pool details

jcli rest v0 stake-pool get <pool-id> <options>

- <pool-id> - hex-encoded pool ID

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

- --output-format <format> - see conventions

YAML printed on success

---
tax: # tax the pool takes from its rewards
  fixed: 5 # amount subtracted first, as a fixed value
  ratio: # ratio taxed after the fixed amount is subtracted, expressed as numerator/denominator
    numerator: 1
    denominator: 10000
  max: 100 # limit of the tax (optional)
total_stake: 2000000000000 # total value staked in the pool
# bech32-encoded stake pool KES key
kesPublicKey: kes25519-12-pk1q7susucqwje0lpetqzjgzncgcrjzx7e2guh900qszdjskkeyqpusf3p39r
# bech32-encoded stake pool VRF key
vrfPublicKey: vrf_pk1rcm4qm3q9dtwq22x9a4avnan7a3k987zvepuxwekzj3uyu6a8v0s6sdy0l
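A minimal Python sketch of how a `fixed`/`ratio`/`max` tax (the same shape as `treasuryTax` in the node settings) could split a reward, following the order given in the comments: the fixed amount first, then the ratio applied to what remains. Two points are assumptions rather than documented behaviour: `max` is read as a cap on the ratio-based part, and the ratio is rounded down with integer division. `tax_cut` is a hypothetical helper.

```python
from typing import Optional, Tuple


def tax_cut(reward: int, fixed: int, numerator: int, denominator: int,
            max_tax: Optional[int] = None) -> Tuple[int, int]:
    """Split a reward into (taxed, after_tax) under the assumptions above."""
    if reward <= fixed:
        return reward, 0  # the fixed part absorbs the whole reward
    left = reward - fixed
    ratio_part = left * numerator // denominator  # rounds down
    if max_tax is not None:
        ratio_part = min(ratio_part, max_tax)  # assumption: max caps the ratio part
    return fixed + ratio_part, left - ratio_part
```

With the example values (fixed 5, ratio 1/10000, max 100), a reward of 20005 is taxed 5 + 2 = 7, while a reward of 10000005 reaches the cap and is taxed 5 + 100 = 105.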

Get rewards history for a specific epoch

Get the rewards history of a given epoch.

jcli rest v0 rewards epoch get <epoch> <options>

- <epoch> - epoch number to get the rewards history for.

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

jcli rest v0 rewards epoch get 82 -h <node_addr>

[
  {
    "epoch": 82, // the epoch number to collect rewards info from (rewards are from epoch 81)
    "drawn": 3835616440000, // total drawn from the reward escrow pot for the epoch
    "fees": 1828810000, // fees contributed into the pot during the epoch
    "treasury": 462179124139, // value added to the treasury
    "stake_pools": {
      "0087011b9c626759f19d9d0315a9b42492ba497438c12efc026d664c9f324ecb": [
        1683091391, // rewards kept by the pool from taxes
        32665712521 // rewards distributed to delegators
      ],
      "014bb0d84f40900f6dd85835395bc38da3ab81435d1e6ee27d419d6eeaf7d16a": [
        47706672,
        906426770
      ],
    },
    "accounts": {
      "ed25519_pk1qqq6r7r7medu2kdpvdra5kwh8uz9frvftm9lf25shm7ygx9ayvss0nqke9": 427549785, // amount added to each account
      "ed25519_pk1qqymlwehsztpzhy2k4szkp7j0xk0ra35jyxcpgr9p9q4ngvzzc5q4sh2gm": 24399360,
      "ed25519_pk1qq9h62jv6a0mz36xgecjrz9tm8z6ay3vj4d64ashxkgxcyhjewwsvgvelj": 22449169,
      "ed25519_pk1qq9l2qrqazk5fp4kt2kvjtsjc32g0ud888um8k2pvms0cw2r0uzsute83u": 1787992,
      "ed25519_pk1qqx6h559ee7pa67dm255d0meekt6dmq6857x302wdwrhzv47z9hqucdnt2": 369024,
    }
  }
]
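A minimal Python sketch of summarising one entry of this output. The annotated example above has comments and trailing commas, so it is not valid JSON as printed; the sketch assumes the real output has been parsed (for example with `json.loads`) into the same keys. Each pool entry is taken as `[rewards kept from taxes, rewards distributed to delegators]`, as the comments describe. `pool_totals` and `total_to_accounts` are hypothetical helpers.

```python
from typing import Dict, List


def pool_totals(stake_pools: Dict[str, List[int]]) -> Dict[str, int]:
    """Total reward per pool: tax kept by the pool plus rewards for delegators."""
    return {pool_id: owned + distributed
            for pool_id, (owned, distributed) in stake_pools.items()}


def total_to_accounts(accounts: Dict[str, int]) -> int:
    """Sum of the amounts added to individual accounts."""
    return sum(accounts.values())
```

For the first pool in the example, 1683091391 + 32665712521 gives a total of 34348803912.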

Get rewards history for some epochs

Get the rewards history of the last <length> epochs, counting back from the tip.

jcli rest v0 rewards history get <length> <options>

- <length> - number of epochs, counting back from the epoch of the tip, to get the rewards history for.

The options are

- -h <node_addr> - see conventions

- --debug - see conventions

jcli rest v0 rewards history get 2 -h <node_addr>

[
  {
    "epoch": 93,
    "drawn": 3835616440000,
    "fees": 641300000,
    "treasury": 467151470296,
    "stake_pools": {
      "0087011b9c626759f19d9d0315a9b42492ba497438c12efc026d664c9f324ecb": [
        1121750881,
        21771124247
      ],
      "014bb0d84f40900f6dd85835395bc38da3ab81435d1e6ee27d419d6eeaf7d16a": [
        429241408,
        8155586765
      ],
      "01bd272cede02d0b0c9cd47b16e5356ab3fb2330dd9d1e972ab5494365309d2a": [
        1691506850,
        32829041110
      ],
    },
    "accounts": {